DeepLogin

Team: J. Wu, P. Ishwar, J. Konrad

Funding: National Science Foundation (CISE-SATC)

Status: Completed (2016-2018)

Summary: This project studies how to learn gesture “styles” (of both the hand and the body) for user authentication. It differs from the BodyLogin and HandLogin projects, which treat a gesture as a “password” and associate each user with a single, chosen gesture motion. This project instead aims to learn a user’s gesture “style” from a set of training gestures, and it leverages the datasets developed in both of these prior projects.

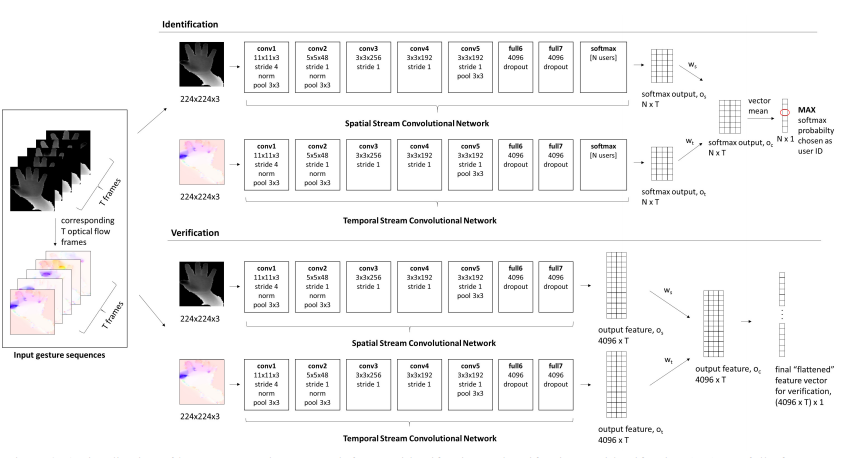

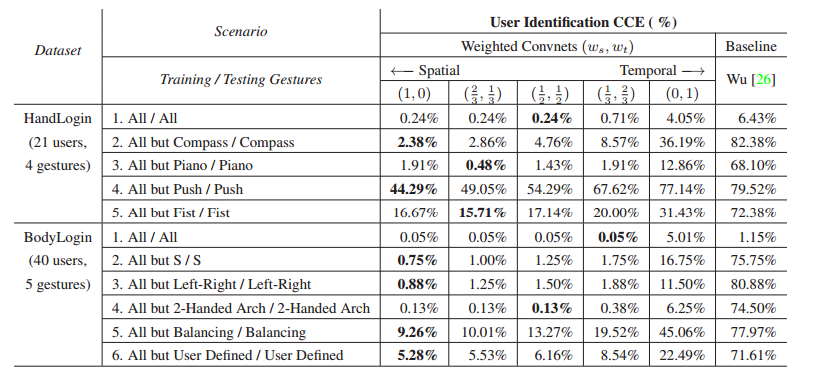

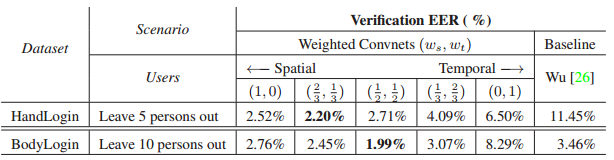

We have developed a deep-learning-based framework for learning gesture style, built on a two-stream convolutional neural network (CNN). We have adapted this approach for both identification and verification, and we benchmarked it against a state-of-the-art method that uses a temporal hierarchy of covariance matrices of features extracted from a sequence of silhouette-depth frames.
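To illustrate how two streams could serve both tasks, here is a minimal sketch of late score fusion. It assumes each stream outputs one raw score per enrolled user and that the streams are combined by a weighted average of their softmax probabilities. The stream names (`spatial`, `temporal`), the fusion weight, the acceptance threshold, and all function names are hypothetical and chosen for illustration; the paper listed below describes the design actually used.

```python
import math

def softmax(logits):
    """Convert raw per-user scores into probabilities."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def fuse_streams(spatial_logits, temporal_logits, spatial_weight=0.5):
    """Late fusion: weighted average of the two streams' probabilities.

    The weight of 0.5 is an assumed default, not a value from the project.
    """
    p_spatial = softmax(spatial_logits)
    p_temporal = softmax(temporal_logits)
    return [spatial_weight * s + (1.0 - spatial_weight) * t
            for s, t in zip(p_spatial, p_temporal)]

def identify(fused_probs):
    """Identification: index of the most likely enrolled user."""
    return max(range(len(fused_probs)), key=fused_probs.__getitem__)

def verify(fused_probs, claimed_user, threshold=0.5):
    """Verification: accept the claim only if its fused score reaches the threshold."""
    return fused_probs[claimed_user] >= threshold

# Made-up scores for three enrolled users from each stream.
spatial = [2.0, 0.5, 0.1]
temporal = [1.5, 1.0, 0.2]
fused = fuse_streams(spatial, temporal)

print(identify(fused))                 # best-matching user index
print(verify(fused, claimed_user=0))   # claim checked against the threshold
print(verify(fused, claimed_user=2))
```

Identification picks the best match among all enrolled users, while verification only checks a single claimed identity against a threshold, which is why the same fused scores can feed both.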

Results: We have validated our approach on data extracted from both the HandLogin and BodyLogin datasets.

A key practical outcome of this approach is that, for both authentication and identification, the CNN does not need to be retrained as long as users do not use dramatically different gestures. A new gesture that is similar to the training gestures can still be used for convenience, at the cost of some degradation in performance.
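One way such retraining-free use could work is to treat the trained network as a fixed feature extractor and match new gestures against stored templates. The sketch below assumes this setup. The `Gallery` class, the cosine distance, the threshold value, and the short feature vectors are all hypothetical stand-ins, not the project's actual implementation.

```python
import math

def cosine_distance(a, b):
    """1 - cosine similarity; assumes both vectors are non-zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

class Gallery:
    """Stores feature templates per user; the network itself is never retrained."""

    def __init__(self):
        self.templates = {}  # user id -> list of feature vectors

    def enroll(self, user, feature):
        """Add one feature vector (from a fixed, pre-trained extractor) for a user."""
        self.templates.setdefault(user, []).append(feature)

    def distance_to(self, user, probe):
        """Distance from the probe to the user's closest stored template."""
        return min(cosine_distance(probe, t) for t in self.templates[user])

    def identify(self, probe):
        """Identification: the enrolled user with the nearest template."""
        return min(self.templates, key=lambda u: self.distance_to(u, probe))

    def verify(self, user, probe, threshold=0.1):
        """Verification: accept if the probe is close enough to the claimed user."""
        return self.distance_to(user, probe) <= threshold

# Placeholder feature vectors; real ones would come from the trained CNN.
gallery = Gallery()
gallery.enroll("alice", [1.0, 0.0, 0.2])
gallery.enroll("bob", [0.1, 1.0, 0.0])

probe = [0.9, 0.1, 0.25]  # features of a new gesture sample
print(gallery.identify(probe))
print(gallery.verify("alice", probe))
print(gallery.verify("bob", probe))
```

Under this assumption, enrolling a user or a similar new gesture only adds templates to the gallery, so the cost is a few distance computations and no network training.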

The dataset and code used in this project have been made available here.

For complete results, discussion and details of our methodology, please refer to our paper below.

Publications:

- J. Wu, P. Ishwar, and J. Konrad, “Two-Stream CNNs for Gesture-Based Verification and Identification: Learning User Style,” in IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Workshop on Biometrics, June 2016.