In-the-Wild Events for People Detection and Tracking from Overhead Fisheye Cameras (WEPDTOF)

Motivation

In 2019-20, we published two people-detection datasets, HABBOF and CEPDOF, with video frames captured by overhead fisheye cameras that were annotated using rotated bounding boxes aligned with a person’s body. We also manually re-annotated a subset of the Mirror Worlds (MW) dataset with rotated bounding-box labels, that we refer to as MW-R. Although these datasets are very useful for developing and evaluating people-detection algorithms, they have been recorded in staged scenarios, where people move according to pre-defined patterns (e.g., everyone starts moving at the same time and performs similar actions). Furthermore, the variety of scenes and person identities are very limited. Therefore, the performance of state-of-the-art algorithms in naturally-occurring scenarios, to be expected in real life, remains unknown. Clearly, a challenging dataset, recorded in-the-wild, with a large variety of different scenes, actions and people is essential for further advancing this area of research.

Therefore, we introduce a new benchmark dataset of in-the-Wild Events for People Detection and Tracking from Overhead Fisheye cameras (WEPDTOF). While preparing WEPDTOF, we focused on several deficiencies of the current datasets. First, we collected in-the-wild (non-staged) videos from YouTube instead of recording our own videos with a constrained variety of people and scenarios. Second, we selected videos from a variety of indoor scenes recorded by different overhead fisheye cameras mounted at different heights. Third, we focused on videos with real-life challenges (e.g., severe occlusions, camouflage of a person by adjacent background, crowded spaces) to allow performance assessment similar to an application in practice.

Description

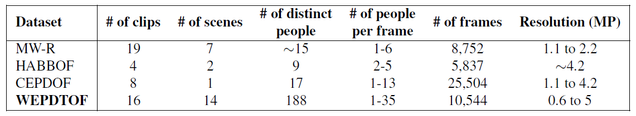

WEPDTOF has been produced at the Visual Information Processing (VIP) Laboratory at Boston University. WEPDTOF consists of 16 clips from 14 YouTube videos, each recorded in a different scene, with 1 to 35 people per frame and 188 person identities consistently labeled across time. The table below shows the statistics of MW-R, HABBOF, CEPDOF and the new WEPDTOF. Compared to the current datasets, WEPDTOF has more than 10 times the number of distinct people, approximately 3 times the maximum number of people per frame, and double the number of scenes.

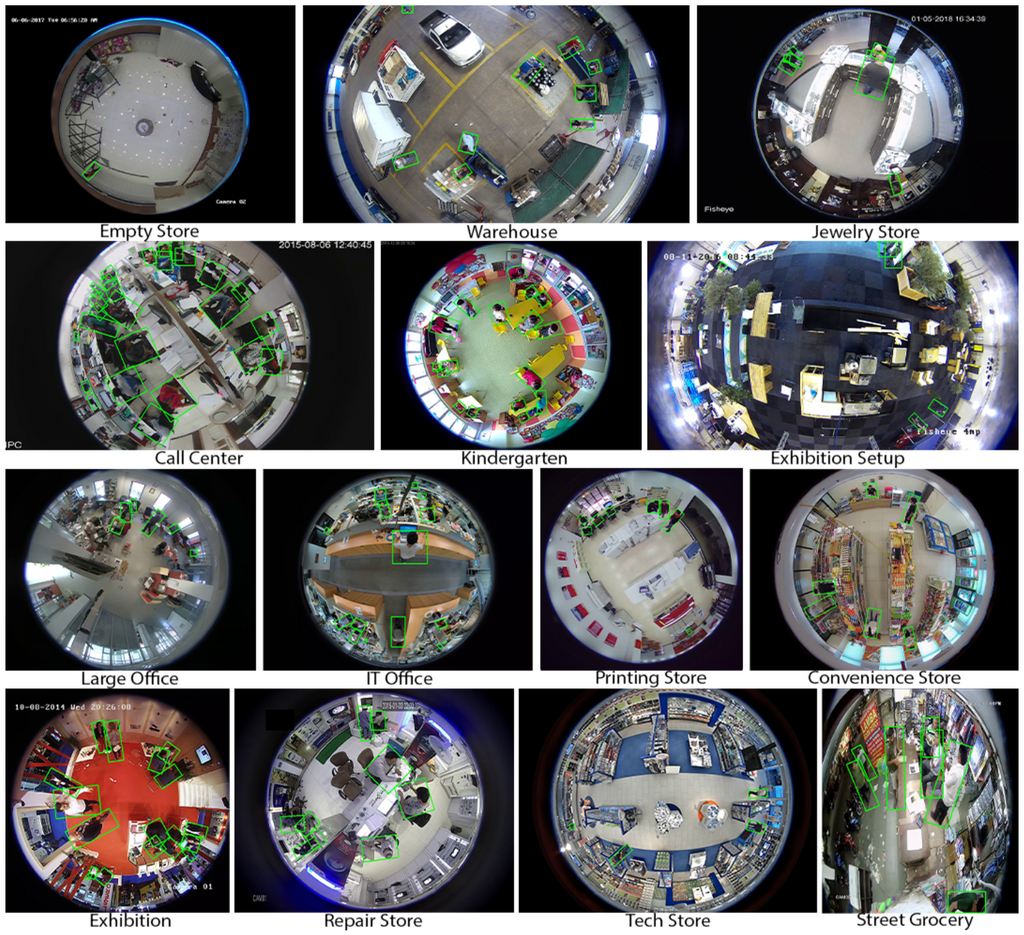

The figure below shows a sample frame from each video in WEPDTOF together with its annotations.

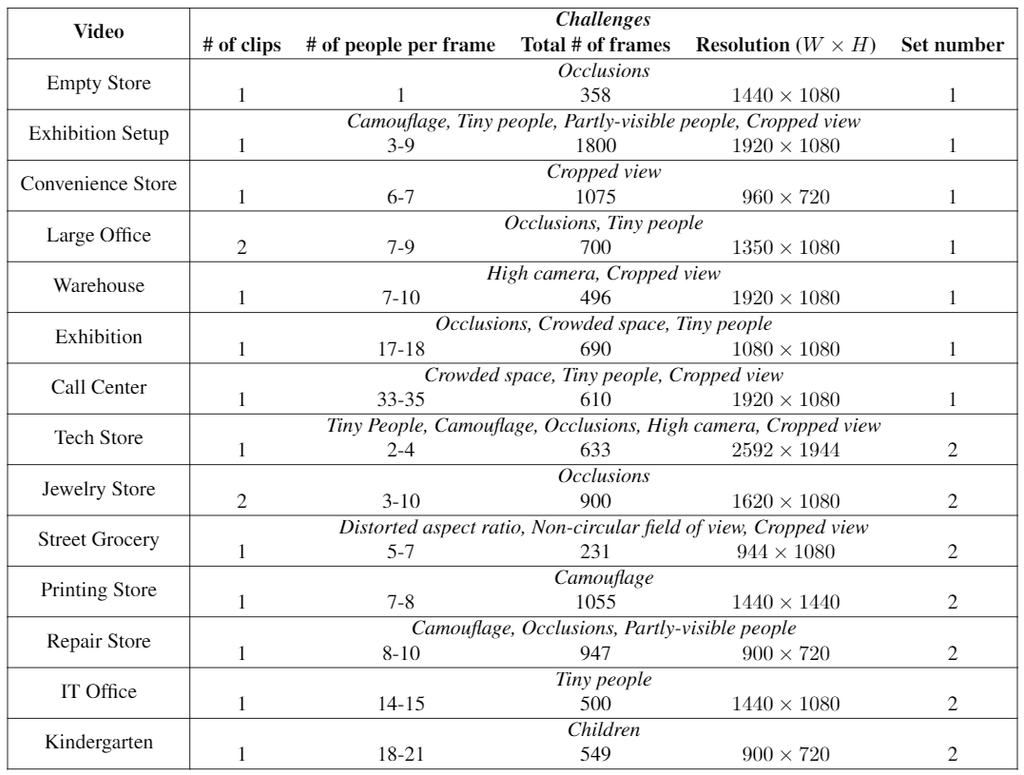

The table below presents details of each video in WEPDTOF.

The salient characteristics of WEPDTOF are discussed below.

- In-the-wild videos: Unlike the current fisheye people-detection datasets recorded in staged scenarios, the videos in WEPDTOF have been collected from YouTube and represent natural human behavior. This is important for assessing an algorithm’s performance in real-world situations.

- Variety: WEPDTOF includes 14 different videos recorded in different scenes (e.g., open office, cubicles, exhibition center, kindergarten, shopping mall). The number of people appearing in a single frame, spatial resolution and length of the videos in WEPDTOF all vary significantly. Furthermore, since the videos in WEPDTOF come from different sources, it is likely they have been captured by different camera hardware (i.e., sensor and lens), installed at different heights, working under different illumination conditions, etc.

- Real-life Challenges: WEPDTOF captures real-world challenges such as camouflage, severe occlusions, cropped field of view and geometric distortions. For example, in the “Exhibition Setup” frame shown above, it is very difficult to detect some people since the color of their clothing is very similar to the background, an effect known as camouflage that is frequently encountered in practice. On the other hand, severe occlusions are clearly visible in “Call Center”. Finally, geometric distortions manifest themselves either as a distorted aspect ratio of the images, such as in “Street Grocery”, or as a dramatically reduced bounding-box size for people at the field-of-view periphery (person far away) as seen in “IT Office”. The challenge of geometric distortions was not significantly captured in any of the previous datasets.

Annotations

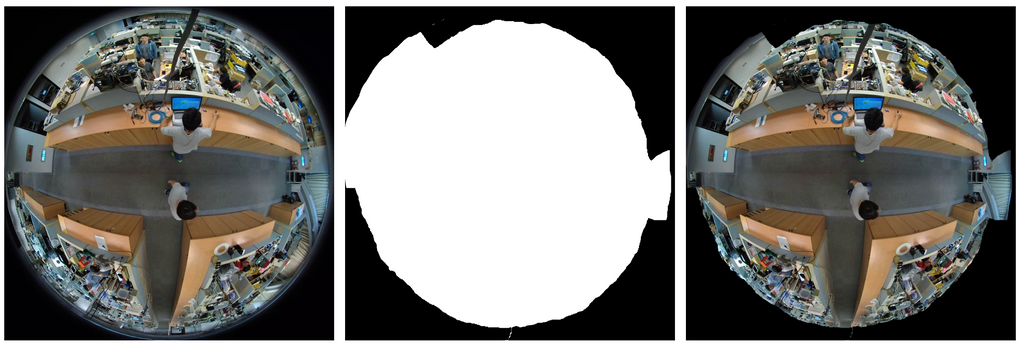

WEPDTOF annotations use the same format as the one used in the CEPDOF dataset. Therefore, the visualization and evaluation code provided with the CEPDOF dataset apply to WEPDTOF as well. To learn more about the annotation format and the software toolkit, please refer to CEPDOF dataset page. In WEPDTOF annotations, we exclude some of the areas that are close to the field-of-view periphery since people appear very small and close to each other making it nearly impossible to annotate accurately. These excluded regions are identified by means of a binary region of interest (ROI) map for each video. The figure below shows an example of ROI for “IT Office”.

Left: Sample frame from “IT Office”; Center: ROI map for this frame; Right: The same frame shown within its ROI map.

Similarly to CEPDOF and MW-R, bounding-box annotations of WEPDTOF are temporally consistent, that is bounding boxes of the same person carry the same ID in consecutive frames. Therefore, WEPDTOF can be used not only for people detection from overhead fisheye cameras but also for tracking and re-identification.

The video below shows short annotated clips from all videos in WEPDTOF.

Dataset Download

You may use this dataset for non-commercial purposes. If you publish any work reporting results using this dataset, please cite the following paper:

M.O. Tezcan, Z. Duan, M. Cokbas, P. Ishwar, and J. Konrad, “WEPDTOF: A dataset and benchmark algorithms for in-the-wild people detection and tracking from overhead fisheye cameras” in Proc. IEEE/CVF Winter Conf. on Applications of Computer Vision (WACV), 2022.

To access the download page, please complete the form below including the captcha.

WEPDTOF Download Form

Contact

Please contact [mtezcan] at [bu] dot [edu] if you have any questions.

Acknowledgements

The development of this dataset was supported in part by the Advanced Research Projects Agency – Energy (ARPA-E), within the Department of Energy, under agreement DE-AR0000944.

We would also like to thank Boston University students for their participation in the annotation of our dataset.