GestureMouse

Team: K. Lai, K. Guo, J. Konrad, P. Ishwar

Funding: Kenneth R. Lutchen Distinguished Fellowship Program, College of Engineering

Status: Completed (2011-2013)

Background: In November 2010, Microsoft launched Kinect for Xbox, an infrared-light, range-sensing camera, with only one Kinect-compatible game. A suicidal or a smart strategy? The Kinect turned out to be an immediate hit, and countless hackers as well as academics started exploring its potential. When Microsoft released a Kinect Software Development Kit (SDK) in June 2011, the floodgates opened: the SDK includes a set of powerful algorithms for extracting scene depth and body silhouettes, and subsequently building a skeleton model of a person in real time.

Although Kinect’s target application is video gaming, its 3-D capture and the robustness of its SDK have helped spawn numerous research and “hacking” projects in the field of human-computer interfaces. Most approaches, however, rely on tracking, not recognition. Usually a set of parameters, e.g., thresholds on joint locations, velocities and accelerations, is specified to localize and track movements. For example, a number of applications have been developed using USC’s Flexible Action and Articulated Skeleton Toolkit (FAAST). Although many approaches have been shown to work well in scripted scenarios (impressive videos on YouTube), one would expect difficulties when users with very different gaits are considered. Furthermore, using a fixed set of parameters (thresholds) makes the extension to new gestures difficult. We believe that gesture recognition based on machine learning algorithms with suitably selected features and representations is likely to be more flexible and robust.

Summary: We developed a real-time human-computer interface that uses recognition of hand gestures captured by a Kinect camera. Gestures can either be predefined or be taught to the system by the user. The interface works reliably in real time on a modest PC. However, since the Kinect uses infrared light sources and has a reliable operating range from about 0.8 m to 4 m, our system works well only in indoor environments without very strong lighting. The developed algorithms are supervised in the sense that we use a dictionary of labeled hand gestures against which we compare a query gesture.

We use Kinect’s SDK to extract a time sequence of skeleton joints that together form a “bag of features”. We capture the statistics of these features in an empirical covariance matrix and, since a covariance matrix does not lie in a Euclidean space, we transform it to such a space by taking the matrix logarithm. For efficiency, we use the nearest-neighbor classifier with a metric given by the Frobenius norm of the difference between log-covariance matrices. The covariance matrix, matrix logarithm, and distance metric calculations are of low-enough computational complexity that for hand-gesture dictionaries of modest size they can be computed in real time (30 frames/sec) on a modest computer.
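The pipeline described above (empirical covariance, matrix logarithm, nearest neighbor under the Frobenius norm) can be sketched in a few lines of Python with NumPy. This is only an illustration under stated assumptions, not the GestureMouse implementation, which was written in C#. The function names, the `(frames, features)` array layout, and the small regularization term `eps` are all assumptions introduced here, and the sketch does not specify which joint-derived features go into each row.

```python
import numpy as np

def log_covariance(features, eps=1e-6):
    """Map a (T, d) array of per-frame feature vectors to a (d, d) log-covariance matrix.

    eps is an assumed regularizer that keeps the covariance positive definite
    so that its matrix logarithm exists.
    """
    X = np.asarray(features, dtype=float)
    C = np.atleast_2d(np.cov(X, rowvar=False))   # empirical covariance over frames
    C = C + eps * np.eye(C.shape[0])
    w, V = np.linalg.eigh(C)                     # C is symmetric: C = V diag(w) V^T
    w = np.maximum(w, eps)                       # guard against round-off
    return (V * np.log(w)) @ V.T                 # matrix log = V diag(log w) V^T

def classify(query_features, dictionary):
    """Return the label of the dictionary entry nearest to the query.

    dictionary is a list of (label, log_cov) pairs; distance is the Frobenius
    norm of the difference between log-covariance matrices.
    """
    q = log_covariance(query_features)
    dists = [np.linalg.norm(q - L, ord="fro") for _, L in dictionary]
    return dictionary[int(np.argmin(dists))][0]
```

In use, each labeled training gesture would be converted once with `log_covariance` and stored in the dictionary, so that classifying a query costs one eigendecomposition plus one norm per dictionary entry.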

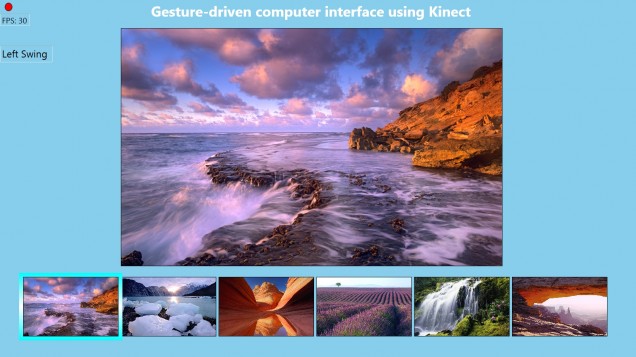

Results: In a leave-one-out cross-validation (LOOCV) test using 8 hand gestures performed by 20 individuals, our method attains a correct-classification rate (CCR) of over 97% when all gestures of one user are held out and over 99% when one realization (video) of a gesture by one user is held out. In practice, the algorithm works very well on new users who were not used for training and requires no tuning. Based on this algorithm, we developed GestureMouse, a complete real-time system in C# that permits a user to:

- move icons to the left by swinging the right arm to the left,

- move icons to the right by swinging the left arm to the right,

- select icons (down one level) by jabbing at the screen with either the left or the right arm,

- go up one level by executing a “come back” gesture with either arm,

- zoom in by diagonally moving two hands outwards,

- zoom out by horizontally moving two hands inwards.
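The mapping from a recognized gesture to an interface action listed above could be expressed as a simple lookup table. The sketch below is hypothetical: the gesture labels and action names are invented for illustration and are not taken from the GestureMouse code.

```python
# Hypothetical gesture labels (keys) and action names (values), for illustration only.
GESTURE_ACTIONS = {
    "right_arm_swing_left": "move_icons_left",
    "left_arm_swing_right": "move_icons_right",
    "jab_left": "select_icon",
    "jab_right": "select_icon",
    "come_back_left": "go_up_one_level",
    "come_back_right": "go_up_one_level",
    "hands_outward": "zoom_in",
    "hands_inward": "zoom_out",
}

def action_for(label):
    """Return the interface action for a recognized gesture label, or "no_op" if unknown."""
    return GESTURE_ACTIONS.get(label, "no_op")
```

Keeping the table separate from the classifier means that a user-trained gesture only needs a new dictionary entry and a new key here.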

GestureMouse was accepted for a Kinect demonstration at CVPR’12 in June 2012.

Publications:

- K. Guo, P. Ishwar, and J. Konrad, “Action recognition from video by covariance matching of silhouette tunnels,” in Proc. Brazilian Symp. on Computer Graphics and Image Proc., pp. 299-306, Oct. 2009.

- K. Guo, P. Ishwar, and J. Konrad, “Action recognition using sparse representation on covariance manifolds of optical flow,” in Proc. IEEE Int. Conf. Advanced Video and Signal-Based Surveillance, pp. 188-195, Aug. 2010, AVSS 2010 Best Paper Award.

- K. Guo, P. Ishwar, and J. Konrad, “Action recognition in video by sparse representation on covariance manifolds of silhouette tunnels,” in Proc. Int. Conf. Pattern Recognition (Semantic Description of Human Activities Contest), Aug. 2010, [SDHA contest web site], Winner of Aerial View Activity Classification Challenge.

- K. Lai, J. Konrad, and P. Ishwar, “A gesture-driven computer interface using Kinect camera,” in Proc. Southwest Symposium on Image Analysis and Interpretation, Apr. 2012.