Action Recognition Using Log-Covariance Matrices

Team: K. Guo, P. Ishwar, J. Konrad

Funding: National Science Foundation (CISE-SNC-NOSS)

Status: Completed (2007-2013)

Background: A 5-year old child has no problem recognizing different types of human actions, such as, walking, running, jumping, waving, etc., from video footage captured by a surveillance camera. Give the video footage to a computer, however, and soon obstacles emerge. One source of difficulty is that different actions, such as walking and running, may sometimes look very similar due to the viewing-angle and the camera frame-rate. Another problem, which makes the task challenging, is action variability: the same action performed by different people may look quite different, e.g., people have different walking gaits. Although this problem has been tackled with limited success over the last decade or so, when storage and processing resources are severely limited and decisions need to be made in real time, no satisfactory system has been developed to date. There are many application areas that could greatly benefit from a fast, accurate, and robust solution: homeland security (e.g., to detect thefts and assaults), healthcare (e.g., to monitor the actions of elderly patients and detect life-threatening symptoms), ecological monitoring (e.g., to analyze the behavior of animals in their natural habitats), and automatic sign-language recognition for assisting the speech-impaired.

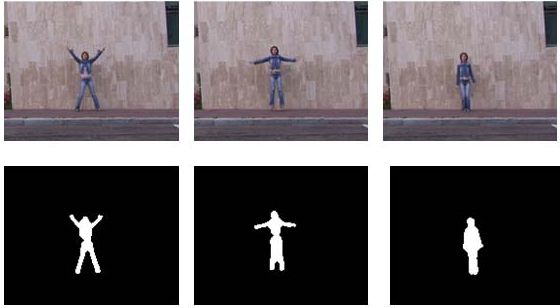

Summary: In this project, a new class of action recognition algorithms has been developed with performance on par or better than that of of state-of-the-art methods. Furthermore, the proposed algorithms have low storage and computational requirements thus making them suitable for real-time implementation. Central to the developed framework is the use of covariance-matrix representation to characterize actions in a video, and leading to a family of algorithms based on different features and classification methods. In one of the early variants of the approach, background subtraction is applied to all video frames in order to extract moving object’s silhouette tunnel. The tunnel’s shape, that captures action dynamics, is then described by a covariance matrix computed from 13-dimensional feature vectors. Combined with the nearest neighbor classification, this early approach produced very promising results [1].

Marrying the covariance-matrix representation with a recently developed classification framework based on sparse linear representation, related to compressive sampling, has lead to improved action recognition accuracy. The covariance matrix representation used is discriminative but also simple to compute and has low storage requirements. However, in order to perform an effective classification the nonlinear action representation needs to be mapped into a linear space by means of log-covariance. The resulting method [3] consistently achieved such a high performance on several databases that the authors were invited to enter the “Aerial View Activity Classification Challenge” in the Semantic Description of Human Actions (SDHA) contest at the 2010 International Conference on Pattern Recognition (ICPR). The goal of the challenge was to test methodologies with realistic surveillance-type videos particularly from low-resolution far-away cameras. Eight teams entered the contest, and in the final stage the BU team “BU Action Covariance Manifolds” won the contest by edging an Italian team from the University of Modena.

The very same framework was also used with another set of features, namely optical flow instead of silhouette tunnels. 12-dimensional feature vectors combining optical flow with its gradient, divergence, vorticity, etc. were computed and aggregated in a covariance matrix. The resulting matrix was used as action representation in the same sparse-linear classification with excellent performance at low memory requirements and low computational cost [4]. The elegance of the method and its excellent performance have been recognized at the at the 7th IEEE International Conference on Advanced Video and Signal-Based Surveillance where the method won the best paper award.

Although the above methods proved successful, they all rely on the knowledge of action boundaries, i.e., when actions start and stop. However, a surveillance camera produces continuous video that includes different types of actions following one another. In order to find action boundaries, we developed a non-parametric statistical framework to learn the distribution of the distance between covariance descriptors [2]. Action changes are then detected as covariance-distance outliers.

Results: The proposed action recognition algorithms were tested on various databases, including the Weizmann Human Action Database (see an example in the figure above), KTH database, and the recent UT-Tower dataset and YouTube dataset. The processing of individual videos was performed using overlapping segments. The correct classification rate (CCR) for leave-one-out-cross-validation (LOOCV) attained varies between different datasets. On the Weizmann dataset a CCR up to 100% has been attained, on the KTH dataset – up to 98.5%, on the UT-Tower low-resolution video – up to 97.2%, and on the YouTube dataset – up to 78.5%. Detailed results can be found in the papers below. It is very important to note that all of the proposed algorithms are lightweight in terms of memory and CPU requirements, and have been implemented to run at video rates on a modern CPU under Matlab.

Promising results have been also obtained for the proposed action change detection. In a ground-truth experiment, where a video sequence was constructed by concatenating different actions by the same individual (61 action changes), a false negative error of 1.64% and false positive error of 0.19% have been attained.

The above framework has three important traits: conceptual simplicity, state-of-the-art performance, and low computational complexity. Its relative simplicity, as compared to some of the top methods in the literature, allows a rapid deployment of robust action recognition operating in real time. This opens new application areas outside the surveillance/security arena, for example in sports video annotation and human-computer interaction. The videos below show three examples of the application of our action recognition framework to the automatic annotation of sports videos. First, we created a dictionary of manually-annotated short video segments (e.g., forehand, backhand) based on 2-3 minutes of sample tennis or pommel-horse videos. Then, we automatically classified each frame of a new video using optical flow and nearest-neighbor classifier with respect to the dictionary; each frame was labeled based on a majority vote from all overlapping video segments that include that frame. The detected actions are denoted by yellow and red dots on the screen (see the legends below).

Tennis example 1: red dot = serve, yellow dot = side on which player hits the ball (forehand versus backhand).

Tennis example 2: red dot = serve, yellow dot = side on which player hits the ball (forehand versus backhand).

Pommel horse example: left dot = spindle on back, middle dot = spindle on chest, right dot = scissors

Publications:

[1] K. Guo, P. Ishwar, and J. Konrad, “Action recognition from video by covariance matching of silhouette tunnels,” in Proc. Brazilian Symp. on Computer Graphics and Image Proc., pp. 299-306, Oct. 2009.

[2] K. Guo, P. Ishwar, and J. Konrad, “Action change detection in video by covariance matching of silhouette tunnels,” in Proc. IEEE Int. Conf. Acoustics Speech Signal Processing, pp. 1110-1113, Mar. 2010.

[3] K. Guo, P. Ishwar, and J. Konrad, “Action recognition in video by sparse representation on covariance manifolds of silhouette tunnels,” in Proc. Int. Conf. Pattern Recognition (Semantic Description of Human Activities Contest), Aug. 2010, [SDHA contest web site], Winner of Aerial View Activity Classification Challenge.

[4] K. Guo, P. Ishwar, and J. Konrad, “Action recognition using sparse representation on covariance manifolds of optical flow,” in Proc. IEEE Int. Conf. Advanced Video and Signal-Based Surveillance, pp. 188-195, Aug. 2010, AVSS 2010 Best Paper Award.

[5] K. Guo, Action recognition using log-covariance matrices of silhouette and optical-flow features. PhD thesis, Boston University, Sept. 2011.

[6] K. Guo, P. Ishwar, and J. Konrad, “Action Recognition from Video using Feature Covariance Matrices,” IEEE Transactions on Image Processing, vol. 22, no. 6, pp. 2479-2494, Jun. 2013.

Matlab Source Code:

Thank you for your interest. For questions, comments, and bugs related to the code, please send email to Dr. Kai Guo <kaiguo@bu.edu> copying Profs. Ishwar and Konrad. In your email, please indicate your full name and affiliation, whether you are a faculty member, researcher, or a student, and whether you are already using or planning to use the code and its intended purpose. Please note that while we will make every effort to respond, sometimes we may be slow in replying.

If you publish any work reporting results using the code below, please cite the following paper:

K. Guo, P. Ishwar, and J. Konrad, “Action Recognition from Video using Feature Covariance Matrices,” IEEE Transactions on Image Processing, vol. 22, no. 6, pp. 2479-2494, Jun. 2013.

Open-source copyright notice:

Copyright (c) 2011, Kai Guo, Prakash Ishwar, and Janusz Konrad. Allrights reserved.

Redistribution and use in source and binary forms, with or withoutmodification, are permitted provided that the following conditions are met:

- Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

- Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS”AS IS” AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOTLIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FORA PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHTHOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL,SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOTLIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE,DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT(INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USEOF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

Link to WinRAR archive file containing Matlab source code: