Rotated Bounding-Box Annotations for Mirror Worlds Dataset (MW-R)

Motivation

The Mirror Worlds (MW) Challenge is a project at Virginia Tech’s Institute for Creativity, Arts and Technology that provides a top-view fisheye image dataset with 30 videos and 13k frames overall. The dataset is valuable for research on people detection from overhead fisheye cameras. However, all person objects in the videos are annotated with axis-aligned bounding boxes. Such annotations are generally accurate for standing people, but for other poses and body orientations (e.g., a person stretching on a chair with the body orthogonal to the field-of-view radius) axis-aligned bounding boxes usually do not fit tightly around people. To account for such body poses, our recent research has focused on detecting people using rotated bounding boxes. To evaluate our algorithms on the MW dataset and to provide more resources for the research community, we manually annotated a subset of the MW dataset with rotated bounding-box labels; we refer to this subset as MW-R. Like our own CEPDOF dataset, MW-R is annotated spatio-temporally: bounding boxes of the same person carry the same ID in consecutive frames. MW-R can therefore also be used for additional vision tasks on overhead fisheye images, such as video-object tracking and human re-identification.

Description

The MW-R dataset was developed at the Visual Information Processing (VIP) Laboratory at Boston University and published in April 2020. MW-R includes only the Train Set of the original MW dataset, which contains 19 videos, 8752 frames, and 23k person objects. More detailed information about the videos and frames can be found on the original Mirror Worlds website.

Data Format

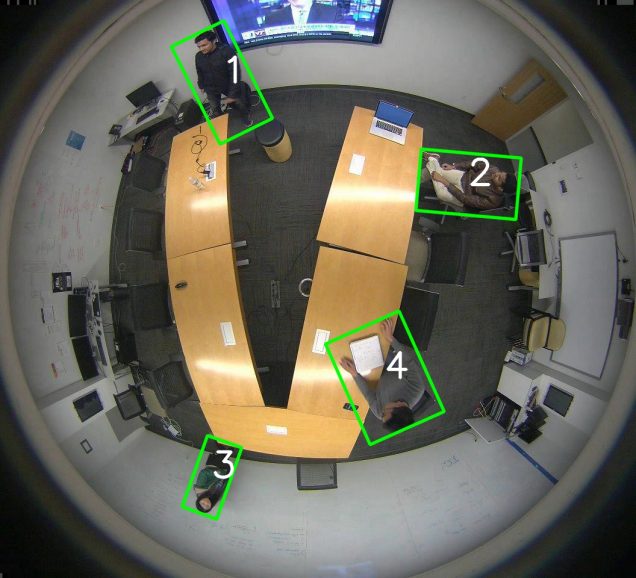

MW-R annotations use the same format as those in CEPDOF dataset. Therefore, the visualization and evaluation code provided with CEPDOF datset apply to MW-R dataset. To learn more about the annotation format and the software toolkit, please refer to CEPDOF dataset page. An example annotation from MW-R superimposed on the original image is shown below.

Dataset Download Instructions

Please follow these steps to use our annotations along with the original MW frames.

- Download the videos: Go to the MW dataset website, navigate to the bottom of the page, and click “Get Raw Videos (1.7G)”. If the link has expired, please contact the MW authors.

- Convert videos to frames: Convert the MW Train Set videos into frames. Please check that the “MW-18Mar-2” video contains 297 frames, the “MW-18Mar-3” video contains 788 frames, and each of the other videos contains 451 frames. In total, there should be 297 + 788 + 17*451 = 8752 frames.

- Rename the frames: To use our annotations, please rename the MW frames using the following file-name pattern: “Mar#_******.jpg”, where # is the video number as in the original MW dataset, and ****** is the frame number in that video, zero-padded to 6 digits. For example, the first frame of the “MW-18Mar-3” video should be “Mar3_000001.jpg”, and the 10th frame of the “MW-18Mar-12” video should be “Mar12_000010.jpg”.

- Download the annotations: Fill out the download form below, download our annotations, and unpack the zip file. If you like, put all the MW frames in our annotation folder. You now have both the MW frames and our annotations.

- Visualization and evaluation (optional but highly recommended): As mentioned above, MW-R annotations use exactly the same format as those in the CEPDOF dataset. To visualize bounding boxes and evaluate algorithms, please use the software toolkit that we provide on the CEPDOF dataset page.
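The renaming rule and the frame-count check from the steps above can be sketched in Python as follows. This is only an illustrative helper, not part of the MW-R or CEPDOF toolkits: the function names are hypothetical, and the sketch assumes that every Train Set video is named “MW-18Mar-N”, as in the three examples given above.

```python
import re

# Frame counts stated in the instructions above; every other
# Train Set video is expected to contain 451 frames.
SPECIAL_FRAME_COUNTS = {"MW-18Mar-2": 297, "MW-18Mar-3": 788}
DEFAULT_FRAME_COUNT = 451


def video_number(video_name: str) -> int:
    """Extract the video number, e.g. 'MW-18Mar-12' -> 12.

    Assumption: all Train Set videos follow the 'MW-18Mar-N' pattern.
    """
    match = re.fullmatch(r"MW-18Mar-(\d+)", video_name)
    if match is None:
        raise ValueError(f"unexpected video name: {video_name!r}")
    return int(match.group(1))


def frame_filename(video_name: str, frame_index: int) -> str:
    """Build the 'Mar#_******.jpg' name for a 1-based frame index."""
    if frame_index < 1:
        raise ValueError("frame_index is 1-based")
    return f"Mar{video_number(video_name)}_{frame_index:06d}.jpg"


def expected_frame_count(video_name: str) -> int:
    """Return the number of frames the video should yield."""
    return SPECIAL_FRAME_COUNTS.get(video_name, DEFAULT_FRAME_COUNT)


if __name__ == "__main__":
    print(frame_filename("MW-18Mar-3", 1))
    print(frame_filename("MW-18Mar-12", 10))
    # Total over 19 videos: two special cases plus 17 videos of 451 frames.
    print(297 + 788 + 17 * DEFAULT_FRAME_COUNT)
```

After extracting frames with any video tool, comparing the number of files per video against `expected_frame_count` is a quick way to catch a dropped or duplicated frame before the annotations are applied.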

Annotation Download

You may use our annotations for non-commercial purposes. If you publish any work reporting results using the MW dataset and our annotations, please cite the original MW dataset and the following paper:

- Z. Duan, M.O. Tezcan, H. Nakamura, P. Ishwar and J. Konrad, “RAPiD: Rotation-aware people detection in overhead fisheye images”, in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Omnidirectional Computer Vision in Research and Industry (OmniCV) Workshop, June 2020.

To access the download page, please complete the form below.

MW-R Download Form

Contact

Please contact [mtezcan] at [bu] dot [edu] if you have any questions.

Acknowledgements

The development of these annotations was supported in part by the Advanced Research Projects Agency – Energy (ARPA-E), within the Department of Energy, under agreement DE-AR0000944.

We would also like to thank Boston University students for their participation in the annotation of the MW dataset.