BodyLogin Datasets

Motivation

The problems of gesture recognition and gesture-based authentication are similar in that both involve users performing gestures. However, in the former the goal is to recognize the gesture regardless of the user, whereas in the latter the goal is to recognize the user regardless of the gesture. Although it might seem that a given gesture dataset can be used interchangeably for studying both problems, e.g., analyzing user authentication performance on a gesture recognition dataset, this is not the case. Datasets for gesture recognition are typically gesture-centric, meaning that they have a high number of gestures per user (many gestures to classify, few users performing them), whereas studying authentication requires the opposite, namely a user-centric dataset that has a high number of users per gesture. With this in mind, we have captured three datasets, each focusing on a different aspect of gesture-based authentication.
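The gesture-centric versus user-centric distinction can be made concrete with a small sketch. The code below is an illustration only, not part of the BodyLogin tooling: it assumes a dataset can be summarized as hypothetical `(user_id, gesture_id)` pairs, one per recorded sample, and the function names `dataset_profile` and `classify` are invented for this example.

```python
from collections import defaultdict


def dataset_profile(records):
    """Return (mean distinct gestures per user, mean distinct users per gesture).

    `records` is an iterable of hypothetical (user_id, gesture_id) pairs,
    one pair per recorded sample. This layout is an assumption made for
    illustration, not the format of the BodyLogin files.
    """
    gestures_by_user = defaultdict(set)
    users_by_gesture = defaultdict(set)
    for user_id, gesture_id in records:
        gestures_by_user[user_id].add(gesture_id)
        users_by_gesture[gesture_id].add(user_id)
    if not gestures_by_user:
        raise ValueError("records must contain at least one sample")
    gestures_per_user = sum(len(g) for g in gestures_by_user.values()) / len(gestures_by_user)
    users_per_gesture = sum(len(u) for u in users_by_gesture.values()) / len(users_by_gesture)
    return gestures_per_user, users_per_gesture


def classify(records):
    """Label a dataset by whichever of the two ratios dominates."""
    gestures_per_user, users_per_gesture = dataset_profile(records)
    if gestures_per_user > users_per_gesture:
        return "gesture-centric"
    if users_per_gesture > gestures_per_user:
        return "user-centric"
    return "balanced"


# Toy data: 3 users each performing 20 gestures (recognition-style),
# versus 40 users each performing 2 gestures (authentication-style).
recognition_style = [(u, g) for u in range(3) for g in range(20)]
authentication_style = [(u, g) for u in range(40) for g in range(2)]
```

With the toy data above, `classify(recognition_style)` reports a gesture-centric dataset and `classify(authentication_style)` a user-centric one. The user and gesture counts are made up and do not describe any of the datasets on this page.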

BodyLogin Dataset: Multiview (BLD-M)

Presented at the Workshop on Biometrics at CVPR 2014

BLD-M is a multiple-viewpoint Kinect dataset that contains gestures recorded under various degradations. Data is provided in the form of unprocessed skeletal joint coordinates. This dataset was used to evaluate the value of additional Kinect viewpoints in gesture authentication [1].

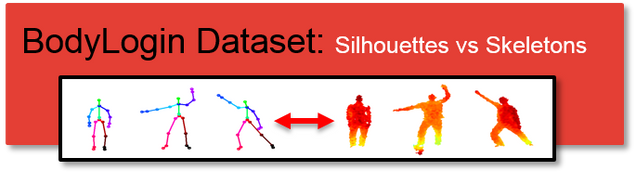

BodyLogin Dataset: Silhouettes vs Skeletons (BLD-SS)

Presented at AVSS 2014

BLD-SS is a Kinect dataset that contains gestures recorded under various degradations. Data is provided in two forms: unprocessed skeletal joint coordinates and depth maps. This dataset was used to compare the performance of silhouette and skeletal features in “real-world” scenarios [2].

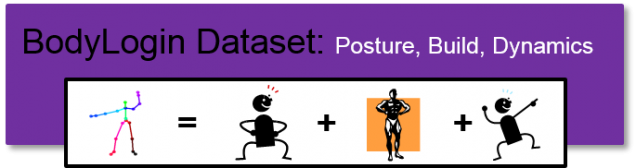

BodyLogin Dataset: Posture, Build and Dynamics (BLD-PBD)

Presented at IJCB 2014

BLD-PBD is a Kinect dataset that contains gestures recorded across two sessions. In the first session, users recorded gestures under normal conditions. In the second session, users were matched to attack targets and were made to spoof their targets' gestures. Data is provided as unprocessed skeletal joint coordinates. This dataset was used to evaluate the effects of user-specific posture, build and dynamics in the context of authentication [3].

Publications

- [1] J. Wu, J. Konrad, and P. Ishwar, “The value of multiple viewpoints in gesture-based user authentication,” in IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Workshop on Biometrics, pp. 90-97, June 2014.

- [2] J. Wu, P. Ishwar, and J. Konrad, “Silhouettes versus skeletons in gesture-based authentication with Kinect,” in Proc. IEEE Int. Conf. Advanced Video and Signal-Based Surveillance (AVSS), pp. 99-106, Aug. 2014.

- [3] J. Wu, P. Ishwar, and J. Konrad, “The value of posture, build and dynamics in gesture based user authentication,” in Int. Joint Conf. on Biometrics (IJCB), pp. 1-8, Oct. 2014.