HandLogin

Team: J. Wu, J. Christianson, J. Konrad, P. Ishwar

Funding: National Science Foundation (CISE-SATC)

Status: Completed (2014-2017)

Summary: This project studies the use of intentional in-air hand gestures for user authentication, in contrast to the full-body motion that we studied for user authentication in our BodyLogin project. We focus on hand gestures captured by a depth sensor, specifically the Kinect v2, chosen because of its predecessor’s ubiquity. Although an extensive body of work exists on hand-gesture recognition using depth sensors such as the Kinect, little work has addressed authentication so far.

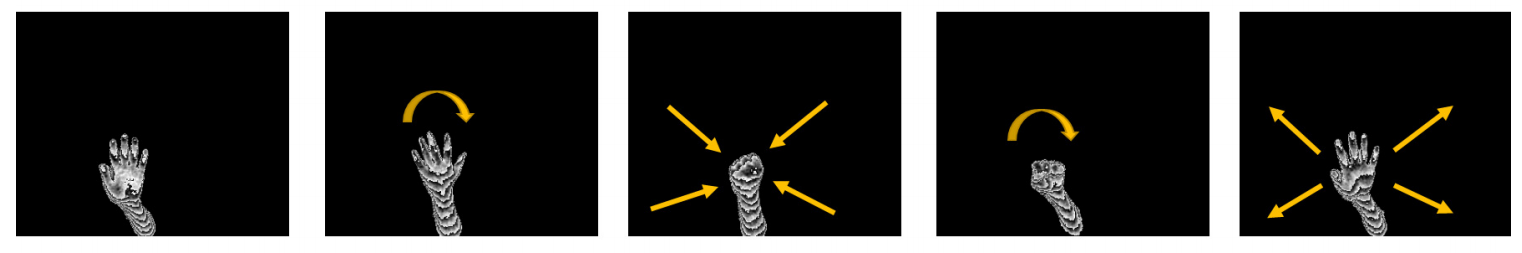

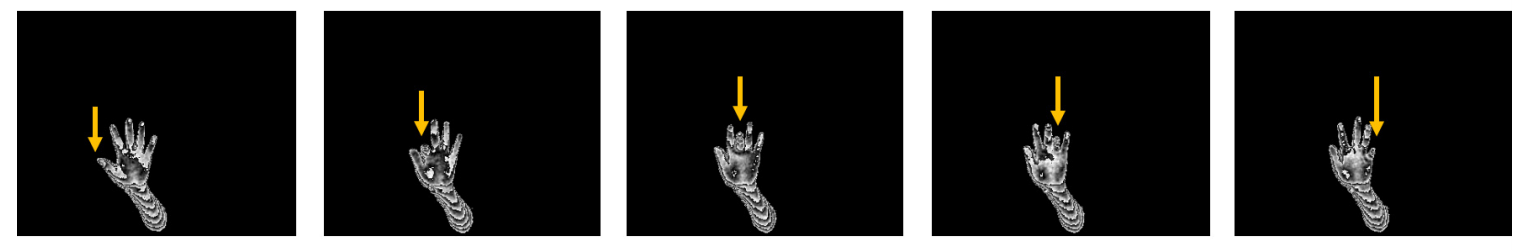

We have developed an approach to in-air hand-gesture authentication using a temporal hierarchy of covariance matrices of features extracted from a sequence of silhouette-depth frames. We have studied 4 different gestures; examples of silhouette-depth frames for “Flipping fist” and “Piano” gestures are shown above.
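As a rough illustration of what a temporal hierarchy of covariance matrices can look like, the Python sketch below computes one covariance descriptor over the whole feature sequence and then over progressively finer temporal segments. It is not the code released with this project: the function names, the dyadic split (1, 2, 4, ... segments per level) and the use of upper-triangular entries as the descriptor are all assumptions made for illustration, and the per-frame features are taken as given.

```python
import numpy as np


def covariance_descriptor(features):
    """Return the upper-triangular entries of the covariance matrix
    of a (n_samples, n_features) array."""
    features = np.asarray(features, dtype=float)
    if features.shape[0] < 2:
        raise ValueError("need at least 2 samples to estimate a covariance")
    cov = np.atleast_2d(np.cov(features, rowvar=False))
    rows, cols = np.triu_indices(cov.shape[0])
    return cov[rows, cols]


def temporal_hierarchy(features, levels=2):
    """Concatenate covariance descriptors over a temporal hierarchy.

    Assumption (hypothetical scheme): level k splits the sequence
    into 2**k contiguous, roughly equal segments.
    """
    features = np.asarray(features, dtype=float)
    parts = []
    for level in range(levels):
        for segment in np.array_split(features, 2 ** level, axis=0):
            parts.append(covariance_descriptor(segment))
    return np.concatenate(parts)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sequence = rng.normal(size=(40, 3))  # 40 samples, 3 features each
    descriptor = temporal_hierarchy(sequence, levels=2)
    print(descriptor.shape)
```

The coarsest level summarizes the gesture as a whole, while finer levels retain some information about the order in which the motion unfolds, which a single covariance matrix would discard.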

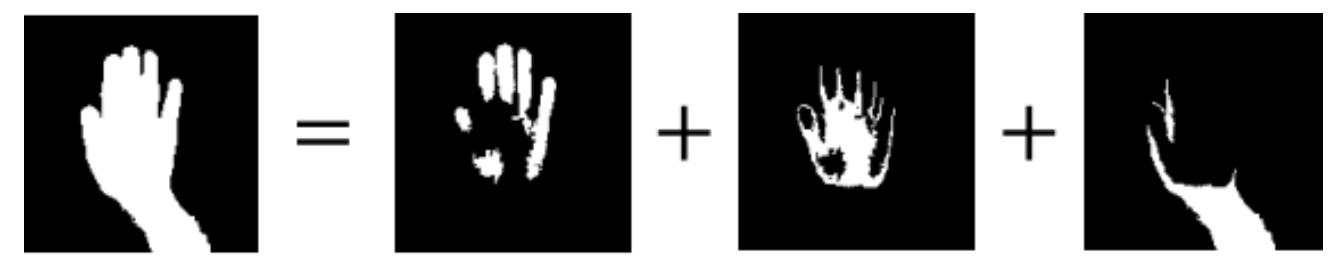

Unlike previous approaches, we incorporate hand shape, which is lost in “signature”-type approaches that use only the dynamics. We also explicitly use depth information, which is especially useful when hand-pose estimates are unreliable or unavailable, by segmenting the overall hand silhouette into 3 “sub”-silhouettes (see the figure above).
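One simple way to obtain depth-based sub-silhouettes is sketched below in Python. The splitting rule used here, three equal-width depth bands between the nearest and farthest hand pixel, is an assumption chosen for illustration and may differ from the segmentation used in the paper; `split_by_depth` and its parameters are hypothetical names.

```python
import numpy as np


def split_by_depth(depth, mask, n_bands=3):
    """Split a hand silhouette into sub-silhouettes by depth.

    depth : 2-D array of per-pixel depth values
    mask  : 2-D boolean array, True on hand pixels
    Assumption: equal-width depth bands spanning the hand's depth range.
    Returns a list of n_bands boolean masks, nearest band first.
    """
    depth = np.asarray(depth, dtype=float)
    mask = np.asarray(mask, dtype=bool)
    if not mask.any():
        return [np.zeros_like(mask) for _ in range(n_bands)]
    values = depth[mask]
    edges = np.linspace(values.min(), values.max(), n_bands + 1)
    # Interior edges map every pixel to a band index in 0..n_bands-1.
    band = np.digitize(depth, edges[1:-1])
    return [mask & (band == k) for k in range(n_bands)]


if __name__ == "__main__":
    depth = np.array([[0.0, 500.0, 600.0],
                      [0.0, 800.0, 1000.0]])
    mask = depth > 0  # treat zero depth as background
    for k, sub in enumerate(split_by_depth(depth, mask)):
        print(k, int(sub.sum()))
```

Each sub-silhouette can then be described separately, so that the parts of the hand closer to or farther from the sensor contribute their own shape information.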

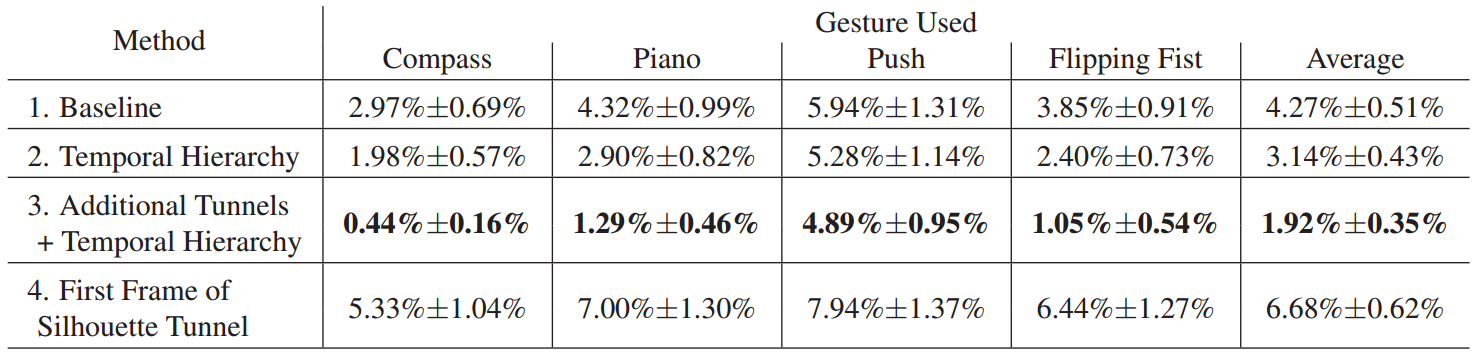

Results: We have validated our approach (results shown in the table below) on a hand-gesture dataset collected from 21 users performing 4 predefined hand gestures. In our experiments, we extracted gesture information from depth data alone; we do not use RGB information.

The dataset and code used in this project have been made available here.

For additional results and details on our methodology, please refer to our paper below.

Publications:

- J. Wu, J. Christianson, J. Konrad, and P. Ishwar, “Leveraging shape and depth in user authentication from in-air hand gestures,” in Proc. IEEE Int. Conf. Image Processing (ICIP), pp. 3195-3199, Sept. 2015.