People counting using overhead fisheye cameras

Team: Shengye Li, M. Ozan Tezcan, Prakash Ishwar, Janusz Konrad

Funding: Advanced Research Projects Agency – Energy (ARPA-E)

Status: Completed (2018-2019)

Background: Counting people in a space with widely varying occupancy levels (e.g., a classroom or dining hall) is important for both energy consumption and occupant comfort. If a room is only 50% occupied, the HVAC (heating, ventilation and air conditioning) system should be adjusted to supply a suitable amount of air without over-cooling or over-heating. This in turn results in energy savings and improved occupant comfort. To achieve this goal, the number of occupants in a space needs to be known.

Summary: We propose to use overhead fisheye cameras (also known as panoramic or 360-degree cameras) for people counting. Unlike standard cameras, fisheye cameras offer a large field of view, and their overhead mounting reduces occlusions. However, methods developed for standard cameras perform poorly on fisheye images because they do not account for the radial image geometry (people appear at various orientations), and no large-scale fisheye-image datasets with radially aligned bounding-box annotations are available for training new algorithms. Therefore, our approach applies an existing deep-learning object-detection method, restricted to the person class, combined with dedicated pre- and post-processing steps to accomplish people counting. As a result, the object-detection method does not need to be re-trained on fisheye images.

Technical Approach: We adapted the YOLOv3 deep-learning model, trained on standard images, for people counting in fisheye images. In one method, called activity-blind (AB), YOLOv3 is applied to 24 rotated, overlapping focus windows covering the whole fisheye image, and the results are post-processed to produce a people count. In another method, called activity-aware (AA), YOLOv3 is applied only to focus windows derived from background subtraction, and the results are post-processed in the same way. In the typical case of only a few people, this significantly speeds up execution with minimal impact on counting accuracy.
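The focus-window geometry can be sketched as follows. This is a minimal illustration, not the authors' code, and it rests on assumptions not stated above: the 24 AB windows are spaced uniformly (15° apart), each window is processed by rotating the fisheye image so that the window sits upright at the top, and the AA windows point at foreground-blob centroids produced by some background-subtraction step. The helper names (`ab_window_angles`, `aa_window_angles`, `map_detection_back`) are hypothetical, and the YOLOv3 call itself is omitted.

```python
import math
from typing import List, Tuple

# Assumed convention: image coordinates with x to the right and y downward;
# angles in degrees, measured clockwise from "straight up" about the image center.


def ab_window_angles(n_windows: int = 24) -> List[float]:
    """Activity-blind (AB): window centers spaced uniformly around the image (assumed)."""
    step = 360.0 / n_windows
    return [k * step for k in range(n_windows)]


def radial_angle(x: float, y: float, cx: float, cy: float) -> float:
    """Angle of point (x, y) about center (cx, cy): 0 = up, 90 = right, 180 = down."""
    return math.degrees(math.atan2(x - cx, cy - y))


def aa_window_angles(centroids: List[Tuple[float, float]],
                     cx: float, cy: float) -> List[float]:
    """Activity-aware (AA): one window per foreground blob, pointed at its centroid.

    `centroids` is a hypothetical input assumed to come from background subtraction.
    """
    return [radial_angle(x, y, cx, cy) for x, y in centroids]


def rotate_point(x: float, y: float, cx: float, cy: float,
                 deg: float) -> Tuple[float, float]:
    """Rotate (x, y) clockwise on screen by `deg` degrees about (cx, cy)."""
    phi = math.radians(deg)
    dx, dy = x - cx, y - cy
    return (cx + dx * math.cos(phi) - dy * math.sin(phi),
            cy + dx * math.sin(phi) + dy * math.cos(phi))


def map_detection_back(bx: float, by: float, w: float, h: float,
                       window_deg: float, cx: float, cy: float):
    """Map an upright box found in a window that was rotated to the top back to the
    original fisheye frame. The image was rotated counter-clockwise by `window_deg`,
    so the box center is rotated clockwise by the same angle; the box keeps its size
    and inherits the window angle as its orientation."""
    x, y = rotate_point(bx, by, cx, cy, window_deg)
    return x, y, w, h, window_deg
```

For example, with the image center at (100, 100), a box centered at (100, 50) in the window originally at 90° maps back to approximately (150, 100), i.e., to the right of the center, with orientation 90°.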

HABBOF Dataset: To evaluate the performance of both algorithms, we collected a new dataset. Although public people-detection datasets for fisheye images exist, they are annotated either with the point location of each person's head or with bounding boxes aligned with the image boundaries. However, due to the radial geometry of fisheye images, people standing under an overhead fisheye camera appear radially aligned, and so should their ground-truth bounding boxes. Since our algorithms produce radially aligned bounding boxes, existing datasets cannot be used to evaluate them. Therefore, we collected and annotated a new dataset called Human-Aligned Bounding Boxes from Overhead Fisheye cameras (HABBOF), which is available for download below.
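A radially aligned box can be described by its center, its size, and a rotation angle determined by where the box lies relative to the image center. The sketch below is illustrative only: it assumes the angle is taken from the direction of the box center as seen from the image center, and `radial_box` is a hypothetical helper, not part of the HABBOF tools or its annotation format.

```python
import math
from typing import List, Tuple


def radial_box(px: float, py: float, w: float, h: float,
               cx: float, cy: float) -> Tuple[float, List[Tuple[float, float]]]:
    """Return (angle_deg, corners) of a w x h box centered at (px, py) whose "up"
    side points away from the fisheye image center (cx, cy).

    Image coordinates: x to the right, y downward. The angle is measured clockwise
    from straight up, so 0 means the person appears upright (above the center).
    Corners are listed top-left, top-right, bottom-right, bottom-left in the box's
    own frame.
    """
    theta = math.atan2(px - cx, cy - py)
    up = (math.sin(theta), -math.cos(theta))    # toward the periphery (head side)
    right = (math.cos(theta), math.sin(theta))  # perpendicular to "up"
    corners = []
    for sr, su in ((-1, 1), (1, 1), (1, -1), (-1, -1)):
        corners.append((px + sr * w / 2 * right[0] + su * h / 2 * up[0],
                        py + sr * w / 2 * right[1] + su * h / 2 * up[1]))
    return math.degrees(theta), corners
```

A box directly above the center keeps angle 0 (an ordinary upright box), while a box to the right of the center is rotated by 90°, so its head side faces the right edge of the image.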

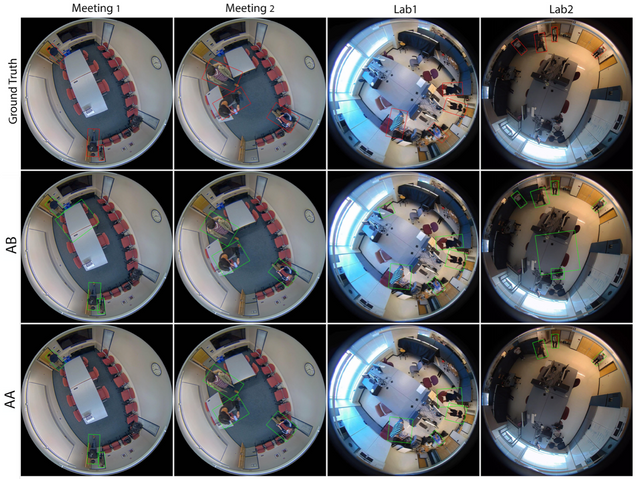

Experimental Results: To confirm the effectiveness of the proposed methods, we conducted experiments on the HABBOF dataset. The figure below shows results for one sample frame from each of the four fisheye video sequences in this dataset: the ground truth and the results of our activity-blind and activity-aware methods.

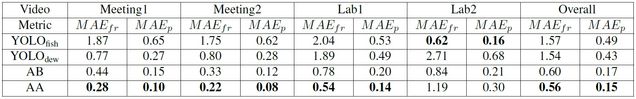

Further, we performed a quantitative comparison of the proposed methods with two baseline methods:

- YOLO_fish: The YOLOv3 algorithm is applied directly to fisheye images to detect bounding boxes of people, followed by the same post-processing steps as in the proposed algorithms. The resulting bounding boxes are rotated to align them radially. This baseline is expected to succeed in the upper part of a fisheye image, where people appear upright, but to perform poorly in the lower part, where people appear upside down.

- YOLO_dew: The YOLOv3 algorithm is applied to dewarped (panoramic) fisheye images, followed by the same post-processing steps as in the proposed methods. The coordinates of the final bounding boxes are then re-warped back to the fisheye domain. Since dewarping severely distorts the image near its center and at its periphery, this method is expected to perform well only in the region between the two.
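The dewarping used by YOLO_dew can be illustrated with a simple polar mapping. This is a sketch under assumptions not stated above: a linear polar unwarp in which the panorama's width spans 360° and its height spans radii from R (top row, where heads point) down to 0 (bottom row, the image center). The function names are hypothetical. The mapping also hints at why the center is badly distorted: an entire panorama row near the bottom comes from a tiny circle of fisheye pixels.

```python
import math
from typing import List, Tuple

# Assumed geometry: fisheye circle centered at (cx, cy) with radius R; panorama of
# width W (0..360 degrees, clockwise from straight up) and height H (radius R..0).


def fisheye_to_panorama(x: float, y: float, cx: float, cy: float,
                        R: float, W: float, H: float) -> Tuple[float, float]:
    theta = math.atan2(x - cx, cy - y) % (2 * math.pi)  # clockwise from up, in [0, 2*pi)
    r = math.hypot(x - cx, y - cy)
    return theta / (2 * math.pi) * W, (1.0 - r / R) * H


def panorama_to_fisheye(u: float, v: float, cx: float, cy: float,
                        R: float, W: float, H: float) -> Tuple[float, float]:
    theta = 2 * math.pi * u / W
    r = R * (1.0 - v / H)
    return cx + r * math.sin(theta), cy - r * math.cos(theta)


def rewarp_box(u0: float, v0: float, u1: float, v1: float, cx: float, cy: float,
               R: float, W: float, H: float) -> List[Tuple[float, float]]:
    """Re-warp an axis-aligned panorama box by mapping only its four corners back to
    the fisheye image (a simplification: the box edges actually map to arcs)."""
    return [panorama_to_fisheye(u, v, cx, cy, R, W, H)
            for u, v in ((u0, v0), (u1, v0), (u1, v1), (u0, v1))]
```

Mapping a fisheye point to the panorama and back returns the original point (up to rounding), which is what allows YOLO_dew detections to be re-warped into the fisheye domain.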

The two tables below show quantitative people-counting and people-detection metrics for the proposed and baseline algorithms. In terms of people counting, the activity-blind method's MAE (averaged across all videos) is more than 60% lower than that of either baseline algorithm, and the activity-aware method's MAE is lower by an additional 6–11%. These results demonstrate that the pre- and post-processing steps enable YOLO to count people effectively in overhead fisheye images even though it was not trained on fisheye images.

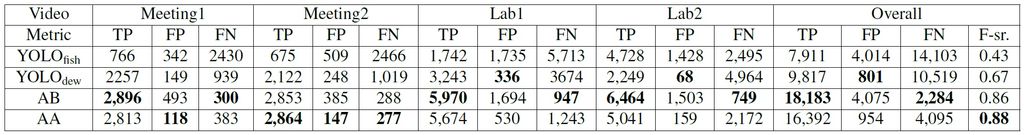
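The people-counting MAE can be computed as below, assuming it is the mean absolute difference between predicted and ground-truth counts over all frames of a video; how per-video values are combined into the reported averages is not specified here. `count_mae` and `relative_reduction` are hypothetical helpers, and any inputs passed to them in an example are toy values, not HABBOF results.

```python
from typing import Sequence


def count_mae(predicted: Sequence[int], ground_truth: Sequence[int]) -> float:
    """Mean absolute error between per-frame predicted and ground-truth people counts."""
    if len(predicted) != len(ground_truth) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(p - g) for p, g in zip(predicted, ground_truth)) / len(predicted)


def relative_reduction(mae_new: float, mae_baseline: float) -> float:
    """Fraction by which `mae_new` is lower than `mae_baseline` (0.6 means 60% lower)."""
    return (mae_baseline - mae_new) / mae_baseline
```

For example, `count_mae([3, 4, 2], [3, 5, 4])` averages the per-frame errors 0, 1 and 2 to give 1.0.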

In terms of people detection, the proposed methods achieve more TPs and fewer FNs than both baseline methods, and both attain an overall F-score more than 0.2 higher than that of the baseline algorithms. Although the AB method detects more people (more TPs) than the AA method, it also produces more FPs. The TP difference arises because, in the AA method, most people appear within a single focus window (each focus window is constructed around detected changes). Even if a person is at the window's center, they may not be exactly upright, and YOLOv3 may fail to detect them. In contrast, in the AB method a person appears in several neighboring focus windows, each at a slightly different angle and some with the whole body visible, so there are more chances for detection. Therefore, the activity-blind method outperforms the activity-aware method in terms of TP and FN and, consequently, has a higher recall. On the other hand, the AB method produces more FPs than the AA method, because the AA method applies YOLO only to selected windows containing regions of interest (ROIs), which reduces the chance of an erroneous detection.
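The detection metrics follow directly from the TP, FP and FN counts. A minimal sketch, assuming the F-score is the usual F1 score (the harmonic mean of precision and recall) and that TP, FP and FN come from a box-matching step not shown here; `detection_scores` is a hypothetical helper.

```python
from typing import Tuple


def detection_scores(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) from true-positive, false-positive and
    false-negative counts; each score falls back to 0.0 when its denominator is 0."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

More TPs and fewer FNs raise recall, while more FPs lower precision; this is the trade-off between the AB and AA methods described above. For instance, `detection_scores(80, 20, 20)` gives a precision, recall and F-score of 0.8 each (up to floating-point rounding).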

Source Code: The source code for this work is available for download from the HABBOF dataset link above.

Publications:

- S. Li, M.O. Tezcan, P. Ishwar, and J. Konrad, “Supervised people counting using an overhead fisheye camera”, in Proc. IEEE Intern. Conf. on Advanced Visual and Signal-Based Surveillance (AVSS), Sep. 2019.