Geometry-Based Person Re-Identification in Fisheye Stereo

Team: Joshua Bone, Mertcan Cokbas, Ozan Tezcan, Janusz Konrad, Prakash Ishwar

Funding: Advanced Research Projects Agency – Energy (ARPA-E)

Status: Completed (2020-2022)

Background: To count the number of people in a very large space, multiple overhead fisheye cameras are needed. However, detecting people in each camera’s field of view (FOV) and adding up the counts is likely to lead to overcounting, since a person may be visible in the FOVs of several cameras. To obtain an accurate occupancy estimate, person re-identification (PRID) is needed so that each person is counted only once.

Summary: The type of PRID needed to accurately count people from multiple overhead fisheye cameras differs from the typical PRID studied to date. In the case considered here, a person can appear at most once in each camera’s FOV (and may not appear at all if occluded). To detect whether a person is visible in the FOVs of two cameras, we treat all detections (identities) in one FOV as the query set and those in the other FOV as the gallery set. Therefore, for each query identity at most one match can be found in the gallery set (or no match at all if occlusion takes place). In contrast, in the typical PRID studied to date for side-mounted rectilinear cameras, a query identity may occur multiple times in the gallery set. Furthermore, rectilinear-camera PRID relies on people’s appearance to perform matching. This is often unreliable in large spaces monitored by overhead fisheye cameras, since a person’s viewing angle and size differ dramatically between cameras, resulting in very different appearances and bounding-box resolutions. Therefore, in this paper we propose to use a person’s location in each FOV to perform matching (since only one person can occupy a given 3-D location in a room).
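The counting argument can be made concrete with a small Python sketch for two cameras. It assumes the cross-view matches are already available as (query index, gallery index) pairs; the function name `unique_count` is hypothetical, and extending it to more than two cameras (where matches must be merged across camera pairs) is not covered here.

```python
def unique_count(n_query, n_gallery, matches):
    """Number of distinct people seen by two overlapping cameras.

    n_query, n_gallery: number of detections in each camera's FOV.
    matches: (query_index, gallery_index) pairs judged to be the same person.
    """
    qs = [q for q, _ in matches]
    gs = [g for _, g in matches]
    # Each person appears at most once per FOV, so a valid matching is
    # one-to-one: no query or gallery index may be used twice.
    if len(set(qs)) != len(qs) or len(set(gs)) != len(gs):
        raise ValueError("matches must be one-to-one")
    # Every match is one person counted in both views; subtract the duplicates.
    return n_query + n_gallery - len(matches)


# 3 detections in camera A, 4 in camera B, 2 people seen by both -> 5 people.
print(unique_count(3, 4, [(0, 1), (2, 3)]))
```

Simply summing per-camera detections would give 7 in this example; subtracting one per match removes the double counts.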

Technical Approach: The main idea is that, for a person visible in two FOVs, their location in one camera’s FOV is uniquely determined by their height and their location in the other camera’s FOV. Using the unified spherical model for omnidirectional cameras, we developed a height-dependent mathematical relationship between these locations. We also proposed a new fisheye-camera calibration method and a novel automated approach to calibration data collection. Finally, we proposed four re-identification algorithms that leverage geometric constraints and demonstrated, on a fisheye-camera dataset we collected, that their re-identification accuracy vastly exceeds that of a state-of-the-art appearance-based method.
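The height-dependent mapping can be sketched in a few lines of Python with NumPy. This is a minimal sketch, not the authors’ implementation, and it rests on several assumptions: the Mei form of the unified spherical model with a single parameter `xi` and pinhole intrinsics `K` (no extra lens-distortion terms), a world frame with the floor at z = 0 and z pointing up, and known world-to-camera rotations `R` and camera centres `C` from calibration. The image point being mapped is assumed to lie at the given height above the floor (for example, the top of the head); which body point the paper uses is not stated here. `FisheyeCamera` and `predict_location` are hypothetical names. A query pixel is lifted to a ray, the ray is intersected with the horizontal plane at the assumed height, and the resulting 3-D point is projected into the gallery camera.

```python
import numpy as np


class FisheyeCamera:
    """Hypothetical container for one calibrated overhead fisheye camera.

    Assumed unified spherical (Mei) model: a camera-frame point is normalised
    onto the unit sphere, shifted by xi along the optical axis, and projected
    with pinhole intrinsics K. R maps world to camera axes; C is the camera
    centre in a world frame with the floor at z = 0 and z pointing up.
    """

    def __init__(self, xi, K, R, C):
        self.xi = float(xi)
        self.K = np.asarray(K, dtype=float)
        self.R = np.asarray(R, dtype=float)
        self.C = np.asarray(C, dtype=float)

    def project(self, X_world):
        """World point -> pixel (u, v)."""
        Xc = self.R @ (np.asarray(X_world, dtype=float) - self.C)
        xs = Xc / np.linalg.norm(Xc)                  # point on the unit sphere
        denom = xs[2] + self.xi                       # shift by xi, then pinhole
        m = np.array([xs[0] / denom, xs[1] / denom, 1.0])
        return (self.K @ m)[:2]

    def lift(self, pixel):
        """Pixel (u, v) -> unit ray direction in the camera frame."""
        mx, my, _ = np.linalg.solve(self.K, [pixel[0], pixel[1], 1.0])
        r2 = mx * mx + my * my
        f = (self.xi + np.sqrt(1.0 + (1.0 - self.xi ** 2) * r2)) / (r2 + 1.0)
        return np.array([f * mx, f * my, f - self.xi])


def predict_location(p_query, height, cam_q, cam_g):
    """Map a query-image point into the gallery image, assuming the 3-D point
    it images lies `height` metres above the floor."""
    d_world = cam_q.R.T @ cam_q.lift(p_query)   # ray direction in world frame
    lam = (height - cam_q.C[2]) / d_world[2]    # intersect the plane z = height
    X = cam_q.C + lam * d_world
    return cam_g.project(X)


if __name__ == "__main__":
    K = [[300.0, 0.0, 640.0], [0.0, 300.0, 480.0], [0.0, 0.0, 1.0]]
    R_down = np.diag([1.0, -1.0, -1.0])         # optical axis points at the floor
    cam_a = FisheyeCamera(0.9, K, R_down, [0.0, 0.0, 3.0])
    cam_b = FisheyeCamera(0.9, K, R_down, [4.0, 0.0, 3.0])
    head = [1.0, 0.5, 1.7]                      # head top of a 170 cm person
    p_a = cam_a.project(head)
    # With the correct height, the prediction coincides with the true projection.
    print(predict_location(p_a, 1.7, cam_a, cam_b), cam_b.project(head))
```

The camera parameters in the demo are made-up values chosen only to exercise the geometry. Calling `predict_location` with heights from 1.28 m to 2.08 m in 4 cm steps yields a set of 21 candidate gallery locations per query point, one per assumed height.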

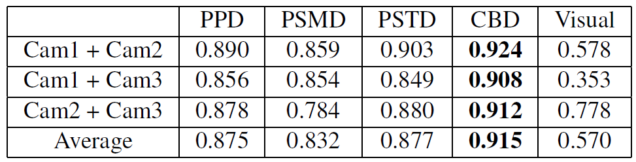

Experimental Results: The table below shows the accuracy (measured by the Query Matching Score, or QMS; see the paper below for its definition) of four geometry-based PRID algorithms and a state-of-the-art appearance-based algorithm.

The appearance-based (visual) algorithm uses ResNet-50 and cosine similarity, followed by greedy matching. The geometry-based algorithms use four different location-based metrics to quantify how likely a match between query and gallery elements is:

- PPD (point-to-point distance): using an average person’s height (170 cm), each query person’s location is predicted in the gallery image; the distances between all predicted query locations and all gallery locations form a distance matrix that is processed by the same greedy algorithm as in visual PRID to complete matching.

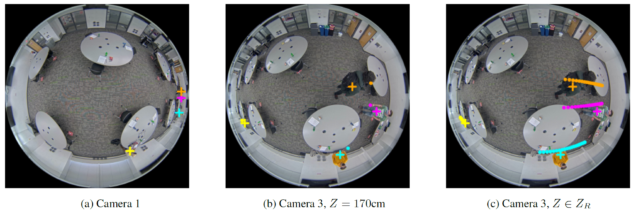

- PSMD (point-to-set minimum distance): using 21 heights (128 cm, 132 cm, …, 208 cm), 21 locations are predicted in the gallery image for each query location (see the figure for examples); the minimum distance between this set of 21 predicted locations and each gallery location contributes one entry to the distance matrix, which is processed by the same greedy algorithm.

- PSTD (point-to-set total distance): as in PSMD, a set of 21 predicted query locations is constructed, but instead of the minimum distance between this set and each gallery location, the total distance is computed and contributes one entry to the distance matrix (followed by the same greedy algorithm).

- CBD (count-based distance): as in PSMD and PSTD, a set of 21 predicted query locations is constructed, but instead of computing distances, a form of voting is conducted (see the paper below for details).
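The shared pipeline (build a query-by-gallery distance matrix, then match greedily) can be sketched in Python with NumPy. This is an illustrative sketch under stated assumptions, not the code used in the paper: predicted gallery-image locations are taken as precomputed inputs (obtained with the height-dependent mapping from the Technical Approach), distances are assumed to be Euclidean pixel distances, and the greedy rule shown (repeatedly pair the globally smallest remaining entry) is one common form of greedy matching that may differ in detail from the paper’s. The optional `max_dist` cut-off for leaving a query unmatched is a hypothetical parameter. CBD is omitted because its voting scheme is only described in the paper. All function names are hypothetical.

```python
import numpy as np


def ppd_matrix(pred, gallery):
    """PPD: pred is (n_q, 2), each query's location predicted in the gallery
    image at one assumed height; gallery is (n_g, 2). Returns (n_q, n_g)."""
    return np.linalg.norm(pred[:, None, :] - gallery[None, :, :], axis=-1)


def _set_distances(pred_set, gallery):
    """pred_set is (n_q, n_h, 2): one predicted location per candidate height.
    Returns all point-to-point distances, shape (n_q, n_h, n_g)."""
    return np.linalg.norm(pred_set[:, :, None, :] - gallery[None, None, :, :], axis=-1)


def psmd_matrix(pred_set, gallery):
    """PSMD: minimum distance between each predicted set and each gallery point."""
    return _set_distances(pred_set, gallery).min(axis=1)


def pstd_matrix(pred_set, gallery):
    """PSTD: total (summed) distance between each predicted set and each gallery point."""
    return _set_distances(pred_set, gallery).sum(axis=1)


def cosine_distance_matrix(feat_q, feat_g):
    """Visual baseline: 1 - cosine similarity between appearance embeddings
    (e.g. ResNet-50 features, which are not computed here)."""
    fq = feat_q / np.linalg.norm(feat_q, axis=1, keepdims=True)
    fg = feat_g / np.linalg.norm(feat_g, axis=1, keepdims=True)
    return 1.0 - fq @ fg.T


def greedy_match(dist, max_dist=np.inf):
    """Greedy one-to-one matching: take the smallest remaining entry, pair
    that query and gallery element, then remove its row and column."""
    d = np.array(dist, dtype=float)          # copy so the caller's matrix is untouched
    matches = []
    for _ in range(min(d.shape)):
        q, g = np.unravel_index(np.argmin(d), d.shape)
        if d[q, g] > max_dist:
            break                            # all remaining pairs are too far apart
        matches.append((int(q), int(g)))
        d[q, :] = np.inf                     # each query is used at most once
        d[:, g] = np.inf                     # each gallery element is used at most once
    return matches


if __name__ == "__main__":
    gallery = np.array([[0.0, 0.0], [10.0, 0.0]])
    pred_170 = np.array([[9.0, 0.0], [1.0, 0.0]])   # made-up predictions
    print(greedy_match(ppd_matrix(pred_170, gallery)))  # -> [(0, 1), (1, 0)]
```

For PPD, `pred` holds one prediction per query (at 170 cm); for PSMD and PSTD, `pred_set` holds the 21 predictions per query (128 cm to 208 cm). Needing one prediction per query instead of 21 is consistent with PPD being the least computationally demanding of the geometric methods.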

Clearly, all geometry-based algorithms significantly outperform the appearance-based (visual) method in this type of PRID. This is not surprising, since a person’s size and viewpoint differ dramatically between cameras. While the CBD-based algorithm slightly outperforms the other algorithms, it is also the most computationally complex. The PPD-based algorithm is the least computationally complex, yet it still performs very well (a larger QMS value is better).

Publications:

- J. Bone, M. Cokbas, O. Tezcan, J. Konrad, and P. Ishwar, “Geometry-based person re-identification in fisheye stereo,” in Proc. IEEE Int. Conf. Advanced Video and Signal-Based Surveillance, Nov. 2021.