Privacy-Preserving Localization via Active Scene Illumination

Team: J. Zhao, N. Frumkin, J. Konrad, P. Ishwar

Funding: National Science Foundation (Lighting Enabled Systems and Applications ERC)

Status: Ongoing (2017-…)

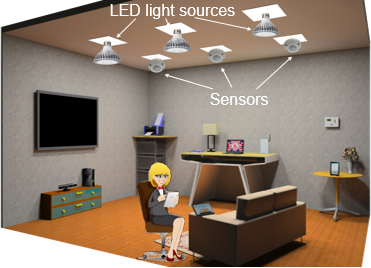

Background: With advanced sensors, processors and state-of-the-art algorithms, smart rooms are expected to react to occupants’ needs and provide productivity, comfort and health benefits. Indoor localization is a key component of future smart-room applications that interact with occupants based on their locations. Traditional camera-based indoor localization systems use visual information to resolve the position of an object or person. This approach, however, may not be acceptable in privacy-sensitive scenarios, since high-resolution images may reveal room and occupant details to eavesdroppers. One way to address privacy concerns is to use extremely low resolution (eLR) sensors, because they capture very limited visual information.

Summary: This project develops a framework for privacy-preserving indoor localization using active illumination. We replace traditional cameras with single-pixel visible light sensors to preserve the visual privacy of room occupants. To make the system robust to ambient lighting fluctuations, we modulate an array of LED light sources to actively control the illumination while recording the light received by the sensors. Inspired by the light reflection model of [Wang et al., JSSL 2014], we proposed a model-based indoor localization algorithm within this framework. The algorithm estimates the change of albedo on the floor plane caused by the presence of an object. In our mock-up testbed (see below), the proposed algorithm achieved an average localization error of less than 6cm for objects of various sizes. The algorithm also proved robust to sensor noise and ambient illumination changes in both simulation and testbed experiments.

Testbed: We have built a small-scale proof-of-concept testbed (Fig. 2) to quantitatively validate the feasibility of active-illumination-based indoor localization. We used 9 collocated LED-sensor modules arranged in a 3×3 grid on the ceiling and a white rectangular foam board as the floor. Each module consists of a Particle Photon board controlling an LED and a single-pixel light sensor. The testbed is 122.5cm wide, 223.8cm long and 70.5cm high. As localization targets, we used flat rectangular objects ranging from 1x (3.2cm×6.4cm) to 64x (25.8cm×51.1cm) in area.

The light modulation process proceeds as follows:

1. turn on one LED, and keep all others off,

2. measure the reflected light intensity at all sensors,

3. repeat steps 1-2 for each of the 9 LEDs,

4. compute the light transport matrix.
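The modulation steps above can be sketched in Python as follows. This is a minimal illustration, not the testbed firmware: the `set_leds` and `read_sensors` callables and the `SimulatedRoom` class are hypothetical stand-ins for the hardware, and the extra all-LEDs-off baseline reading used to cancel ambient light is an assumption about how the ambient contribution might be removed.

```python
import numpy as np


def measure_transport_matrix(set_leds, read_sensors, n_leds=9):
    """Estimate the light transport matrix by lighting one LED at a time.

    set_leds(state) applies a 0/1 vector to the LEDs (hypothetical interface);
    read_sensors() returns one intensity reading per sensor.
    """
    # Assumption: an all-off reading captures the ambient-only contribution.
    set_leds(np.zeros(n_leds))
    baseline = read_sensors()

    columns = []
    for k in range(n_leds):
        state = np.zeros(n_leds)
        state[k] = 1.0                              # one LED on, all others off
        set_leds(state)
        columns.append(read_sensors() - baseline)   # light due to LED k only

    set_leds(np.zeros(n_leds))
    # Entry (j, k) is the light received at sensor j from LED k.
    return np.column_stack(columns)


class SimulatedRoom:
    """Hypothetical linear room: reading = transport @ led_state + ambient."""

    def __init__(self, transport, ambient):
        self.transport = np.asarray(transport, dtype=float)
        self.ambient = np.asarray(ambient, dtype=float)
        self.state = np.zeros(self.transport.shape[1])

    def set_leds(self, state):
        self.state = np.asarray(state, dtype=float)

    def read_sensors(self):
        return self.transport @ self.state + self.ambient
```

Under this linear model, running `measure_transport_matrix` on two `SimulatedRoom` instances that share a transport matrix but differ in ambient level yields the same matrix, which is the property that makes the measurement insensitive to ambient light.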

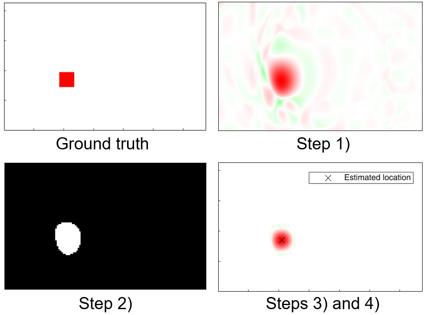

Localization Algorithm: We developed an active-illumination-based indoor localization algorithm using the light transport matrix obtained from light modulation. The entries of a light transport matrix are the relative amounts of reflected light from each LED to each sensor. The light transport matrix is determined by room geometry, sensor and source placements and floor albedo distribution, and is unaffected by ambient light. We estimate the change in the room state (e.g. from empty to occupied) from the change in the light transport matrix. The process is as follows:

1. solve for albedo change map using ridge regression – coarse localization,

2. threshold the map from step 1 to find a focus area most likely to include an object,

3. solve for albedo change map in the focus area – fine localization,

4. location estimate: centroid of map from step 3.
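The coarse-to-fine procedure above can be sketched with NumPy as follows. This is an illustration under stated assumptions rather than the published implementation: it assumes the change in the vectorized light transport matrix, `delta_a`, is approximately linear in the floor albedo change, `delta_a ≈ C @ delta_albedo`, where `C` is a hypothetical sensitivity matrix derived from a reflection model. The threshold rule (a fraction `frac` of the peak magnitude), the use of ridge regression again in the fine step, and the magnitude-weighted centroid are also assumptions.

```python
import numpy as np


def ridge_solve(C, d, lam):
    """Return x minimizing ||C x - d||^2 + lam * ||x||^2."""
    n = C.shape[1]
    return np.linalg.solve(C.T @ C + lam * np.eye(n), C.T @ d)


def localize(C, delta_a, pixel_xy, lam_coarse=1e-3, lam_fine=1e-3, frac=0.5):
    """Coarse-to-fine localization from a change in the light transport matrix.

    C        : (n_measurements, n_pixels) hypothetical sensitivity matrix
    delta_a  : (n_measurements,) change in the vectorized transport matrix
    pixel_xy : (n_pixels, 2) floor coordinates of each albedo pixel
    Returns (estimate, fine_map); estimate is None if no change is detected.
    """
    # Step 1: coarse albedo-change map over the whole floor.
    coarse = ridge_solve(C, delta_a, lam_coarse)
    mag = np.abs(coarse)
    if mag.max() == 0.0:
        return None, coarse

    # Step 2: focus area = pixels whose change is close to the peak (assumed rule).
    focus = mag >= frac * mag.max()

    # Step 3: re-solve using only the pixels inside the focus area.
    fine = np.zeros_like(coarse)
    fine[focus] = ridge_solve(C[:, focus], delta_a, lam_fine)

    # Step 4: centroid of the fine map, weighted by the magnitude of the change.
    weights = np.abs(fine)
    if weights.sum() == 0.0:
        return None, fine
    estimate = (weights[:, None] * pixel_xy).sum(axis=0) / weights.sum()
    return estimate, fine
```

Taking the magnitude of the albedo change lets the same sketch handle objects that are either darker or brighter than the floor. The regularization weights `lam_coarse` and `lam_fine` are placeholders and would need tuning for a real sensitivity matrix.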

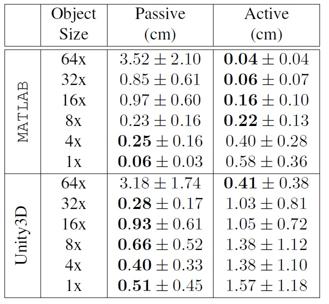

Experimental Results: We tested the performance of our localization algorithm in both simulated and real-world experiments. The simulations were performed in MATLAB and Unity3D, a video game development environment, and the real-world experiments were performed in our testbed.

We compared our algorithm with localization based on passive illumination. In passive illumination, none of the light sources is controllable and all of them produce constant light. The localization errors from simulations are shown in Table 1.

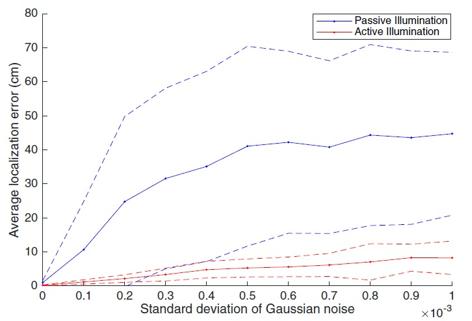

The active and passive illumination algorithms both work well in ideal simulations. However, when we add noise to the sensor readings in simulations, the performance of the passive illumination algorithm drops significantly while that of the active illumination algorithm does not (Fig. 4).

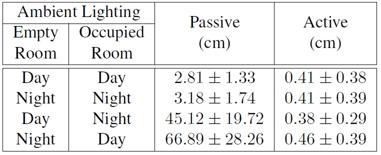

Also, when we simulate an ambient illumination change between the empty and occupied room states, the passive illumination algorithm fails while the active illumination algorithm still works well (Table 2).

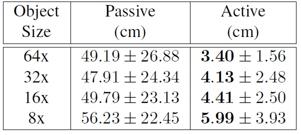

In the real testbed, the active illumination algorithm maintained good performance and achieved an average localization error of less than 6cm for object sizes from 8x to 64x. The passive illumination algorithm had an average localization error of around 50cm because of its sensitivity to sensor reading noise. Results are shown in Table 3.

For additional results and details on the experiments, please refer to our paper [2].

Publications:

1. J. Zhao, P. Ishwar, and J. Konrad, “Privacy-preserving indoor localization via light transport analysis”, in IEEE Int. Conf. on Acoustics, Speech and Signal Processing (ICASSP), pp. 3331–3335, 2017.

2. J. Zhao, N. Frumkin, J. Konrad, and P. Ishwar, “Privacy-preserving indoor localization via active scene illumination”, in IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), CV-COPS Workshop, 2018.