Privacy-Preserving, Indoor Occupant Localization Using a Network of Single-Pixel Sensors

Team: Douglas Roeper, Jiawei Chen, Natalia Frumkin, Janusz Konrad, Prakash Ishwar

Funding: National Science Foundation (Lighting Enabled Systems and Applications ERC) and Boston University Undergraduate Research Opportunities (UROP) Program.

Status: Ongoing (2016-…)

Summary: This project proposes an approach to indoor occupant localization using a network of single-pixel, visible-light sensors. In addition to preserving privacy, our approach vastly reduces the data transmission rate and is agnostic to eavesdropping. We develop two purely data-driven localization algorithms and study their performance using a network of 6 such sensors. In the first algorithm, we divide the monitored floor area (2.37 m × 2.72 m) into a 3×3 grid of cells and use a support vector machine classifier to assign the location of a single person to one of the 9 cells. In the second algorithm, we estimate the person’s coordinates using support vector regression. In cross-validation tests in public (e.g., conference room) and private (e.g., home) scenarios, we obtain a 67–72% correct classification rate for cells and a 0.31–0.35 m mean absolute distance error within the monitored space. Given the simplicity of the sensors and the processing, these results are encouraging and could already enable useful applications.
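The two figures of merit quoted in the summary can be computed as in the minimal Python sketch below, which is not the project's code. It assumes that "mean absolute distance error" means the mean Euclidean distance between estimated and true (x, y) positions in metres; the function names are hypothetical.

```python
# Illustrative sketch; function names and the distance definition are assumptions.
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def correct_classification_rate(true_cells: Sequence[int], pred_cells: Sequence[int]) -> float:
    """Fraction of time instants whose predicted cell matches the true cell."""
    if len(true_cells) != len(pred_cells) or not true_cells:
        raise ValueError("need two non-empty sequences of equal length")
    hits = sum(t == p for t, p in zip(true_cells, pred_cells))
    return hits / len(true_cells)


def mean_distance_error(true_xy: Sequence[Point], pred_xy: Sequence[Point]) -> float:
    """Mean Euclidean distance (metres) between true and estimated positions (assumed metric)."""
    if len(true_xy) != len(pred_xy) or not true_xy:
        raise ValueError("need two non-empty sequences of equal length")
    total = sum(math.dist(t, p) for t, p in zip(true_xy, pred_xy))
    return total / len(true_xy)


# Toy check with made-up numbers (not data from the project).
print(correct_classification_rate([0, 4, 8, 4], [0, 4, 7, 4]))  # 0.75
print(mean_distance_error([(0.0, 0.0), (1.0, 1.0)], [(0.5, 0.0), (1.0, 1.0)]))  # 0.25
```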

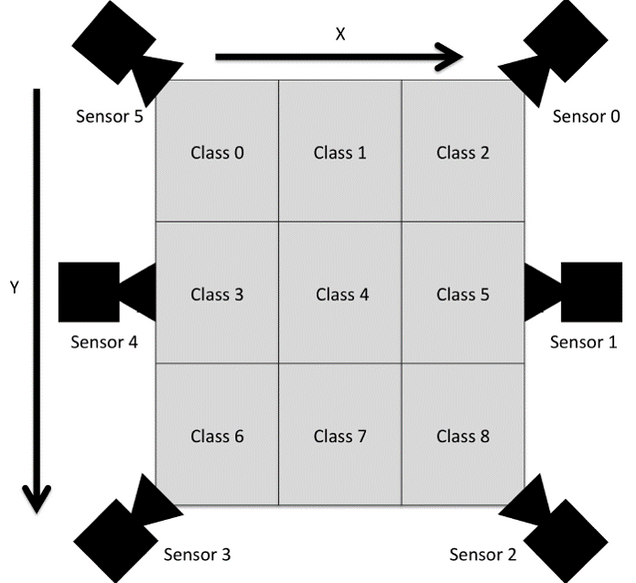

Testbed: We use six TCS3472 color sensors from AMS AG in the configuration depicted in Fig. 1. All sensors are mounted overhead on aluminum scaffolding and point downward. The monitored floor area measures 2.37 m × 2.72 m. Each sensor’s lens limits its field of view to about 36°. To train our localization algorithms and evaluate the system’s performance, we captured ground-truth data using OptiTrack, a commercial motion capture system.
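To get a feel for how much floor each sensor sees, one can compute the footprint of a downward-pointing sensor. Assuming the 36° field of view is the full cone angle and the floor is flat, a sensor mounted at height h sees a disc of radius r = h·tan(18°). The mounting height is not given on this page, so the 2 m used here is purely hypothetical, as is the function name.

```python
# Illustrative sketch; the mounting height and the cone-angle interpretation are assumptions.
import math


def footprint_radius(height_m: float, fov_deg: float = 36.0) -> float:
    """Radius (m) of the floor disc seen by a downward-pointing sensor.

    Assumes fov_deg is the full cone angle and the floor is flat and
    perpendicular to the sensor's optical axis.
    """
    half_angle = math.radians(fov_deg) / 2.0
    return height_m * math.tan(half_angle)


h = 2.0  # hypothetical mounting height in metres
r = footprint_radius(h)
print(f"radius ≈ {r:.2f} m, diameter ≈ {2 * r:.2f} m")
```

At that assumed height, each footprint is roughly 1.3 m across, smaller than either side of the 2.37 m × 2.72 m floor, which suggests why several sensors are needed to cover it.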

Localization Algorithms: We develop two purely data-driven (learning-based) localization algorithms. The first is a coarse localization algorithm that uses a support vector machine (SVM) classifier to assign a subject’s location to one of the 9 cells of a 3×3 grid (Fig. 1). At each time instant, it outputs an estimate of the cell in which the subject is located. The second algorithm uses support vector regression (SVR) to provide, at each time instant, fine-grained, real-valued estimates of the subject’s true x and y coordinates. Both algorithms use as features the luminance values from all 6 sensors, concatenated into a single vector.
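The classification path can be sketched under stated assumptions; this is not the authors' implementation. Ground-truth OptiTrack positions are turned into 9 cell labels by a hypothetical `cell_of`, assuming the floor's origin is at one corner with x along the 2.37 m side. The classifier here is a one-vs-rest linear SVM trained with Pegasos-style stochastic subgradient descent, a stand-in chosen because it fits in a few lines of standard-library Python. The kernel and solver used in the project are not specified here, and a practical system would more likely rely on a library such as scikit-learn's `SVC` (and `SVR` for the regression path, which is not shown). All names are hypothetical, and each feature row stands in for the concatenated luminance values at one time instant.

```python
# Illustrative sketch; names, floor orientation, and the linear Pegasos solver are assumptions.
from typing import List, Sequence

FLOOR_X_M = 2.37  # assumption: x axis runs along the 2.37 m side
FLOOR_Y_M = 2.72  # assumption: y axis runs along the 2.72 m side
GRID = 3          # 3x3 grid -> 9 cells


def cell_of(x: float, y: float) -> int:
    """Map a ground-truth floor position (metres) to a row-major cell index 0..8."""
    col = min(max(int(x / (FLOOR_X_M / GRID)), 0), GRID - 1)
    row = min(max(int(y / (FLOOR_Y_M / GRID)), 0), GRID - 1)
    return row * GRID + col


def _dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wj * xj for wj, xj in zip(w, x))


def train_linear_svm_ovr(X: Sequence[Sequence[float]], labels: Sequence[int],
                         n_classes: int, lam: float = 0.1,
                         epochs: int = 200) -> List[List[float]]:
    """Train one linear SVM per class (one-vs-rest) with Pegasos subgradient steps.

    A constant 1 is appended to every feature vector so the bias is learned
    (and regularized) as an extra weight. Samples are visited in a fixed
    cyclic order, so training is deterministic.
    """
    Xb = [list(x) + [1.0] for x in X]
    weights = []
    for k in range(n_classes):
        w = [0.0] * len(Xb[0])
        t = 0
        for _ in range(epochs):
            for xi, label in zip(Xb, labels):
                t += 1
                eta = 1.0 / (lam * t)  # Pegasos step size
                y = 1.0 if label == k else -1.0
                violated = y * _dot(w, xi) < 1.0  # hinge loss is active
                # Gradient step for the L2 regularizer (lam / 2) * ||w||^2.
                w = [(1.0 - eta * lam) * wj for wj in w]
                if violated:
                    # Subgradient step for the hinge loss of this sample.
                    w = [wj + eta * y * xj for wj, xj in zip(w, xi)]
        weights.append(w)
    return weights


def predict_ovr(weights: List[List[float]], x: Sequence[float]) -> int:
    """Return the class whose one-vs-rest score is highest."""
    xb = list(x) + [1.0]
    scores = [_dot(w, xb) for w in weights]
    return max(range(len(scores)), key=scores.__getitem__)


# Toy 2-D features standing in for concatenated luminance vectors (made-up data).
X = [(0.0, 3.0), (0.2, 3.1), (-3.0, -2.0), (-3.1, -2.1), (3.0, -2.0), (3.1, -1.9)]
toy_labels = [0, 0, 1, 1, 2, 2]
W = train_linear_svm_ovr(X, toy_labels, n_classes=3)
print([predict_ovr(W, x) for x in X])
print(cell_of(1.2, 1.4))  # 4, the centre cell
```

Real sensor features would likely need scaling and tuning of `lam`; the toy clusters here are chosen to be far apart so the behaviour is easy to check.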

Dataset: We collected a localization dataset from 4 people. Each person took four separate walks of about 90 seconds each within the 2.37 m × 2.72 m floor area in the sensors’ field of view. We trimmed both the sensor data and the OptiTrack data so that each walk has 971 samples.
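As an aside, 971 samples over roughly 90 s corresponds to about 11 sensor samples per second. The exact trimming procedure is not described on this page, so the following is only one plausible approach under stated assumptions: both streams carry timestamps on a shared clock, each sensor sample is paired with the nearest-in-time OptiTrack sample, and only pairs inside the window covered by both streams are kept, up to 971 per walk. The function name is hypothetical.

```python
# Illustrative sketch; the alignment strategy and function name are assumptions.
from bisect import bisect_left
from typing import List, Sequence, Tuple, TypeVar

V = TypeVar("V")
P = TypeVar("P")


def align_and_trim(sensor_t: Sequence[float], sensor_vals: Sequence[V],
                   mocap_t: Sequence[float], mocap_xy: Sequence[P],
                   n_samples: int = 971) -> List[Tuple[V, P]]:
    """Pair each sensor sample with the nearest-in-time motion-capture sample,
    keeping only the first n_samples pairs inside the streams' common window.

    Both timestamp sequences must be sorted and expressed in seconds on a
    shared clock.
    """
    start = max(sensor_t[0], mocap_t[0])
    end = min(sensor_t[-1], mocap_t[-1])
    pairs: List[Tuple[V, P]] = []
    for t, v in zip(sensor_t, sensor_vals):
        if t < start or t > end:
            continue  # outside the window covered by both streams
        j = bisect_left(mocap_t, t)  # first mocap index with time >= t
        if j > 0 and (j == len(mocap_t) or t - mocap_t[j - 1] <= mocap_t[j] - t):
            j -= 1  # the previous mocap sample is at least as close
        pairs.append((v, mocap_xy[j]))
        if len(pairs) == n_samples:
            break
    if len(pairs) < n_samples:
        raise ValueError(f"only {len(pairs)} aligned samples, need {n_samples}")
    return pairs


# Tiny made-up example: 5 sensor samples, 4 motion-capture samples.
pairs = align_and_trim([0.0, 0.1, 0.2, 0.3, 0.4], [10, 11, 12, 13, 14],
                       [0.05, 0.12, 0.21, 0.33], ["a", "b", "c", "d"],
                       n_samples=3)
print(pairs)  # [(11, 'b'), (12, 'c'), (13, 'd')]
```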

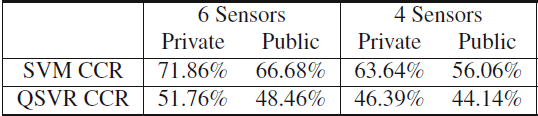

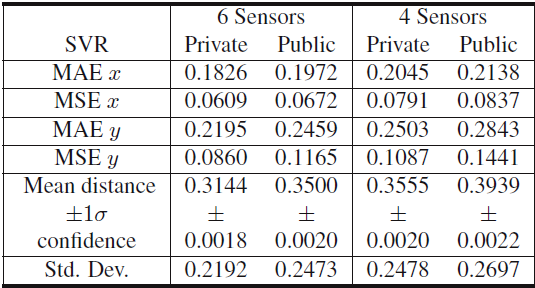

Experimental Results: We evaluated performance in two primary usage scenarios. The first considers a public setting, such as a conference room, where the system cannot be trained on all subjects because new subjects, never seen by the system, may enter. The second considers a private setting, such as a home, where the system is used primarily by the same set of people and thus can be trained on all users. In addition, to test how sensitive the performance is to the number of sensors, we repeated all of the above experiments using only the 4 corner sensors (sensors 0, 2, 3, and 5) and compared the results with those obtained using all 6 sensors. The classification and regression results are shown in Tables 1 and 2, respectively.
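The two evaluation protocols and the corner-sensor variant can be expressed as data splits. This sketch assumes the public scenario is leave-one-person-out (train on 3 people, test on the 4th) and the private scenario holds out one walk index for every person, so all 4 people appear in both training and test data; these fold definitions are assumptions, not taken from the paper. It also assumes that feature vectors are ordered by sensor ID 0 to 5. All names are hypothetical.

```python
# Illustrative sketch; fold definitions and names are assumptions, not the paper's protocol.
from itertools import product
from typing import Iterator, List, Sequence, Tuple

Walk = Tuple[int, int]            # (person id, walk id)
PEOPLE = range(4)                 # 4 people in the dataset
WALKS = range(4)                  # 4 walks per person
CORNER_SENSORS = (0, 2, 3, 5)     # sensor IDs of the 4 corner sensors
ALL_SENSORS = tuple(range(6))


def _all_walks() -> List[Walk]:
    return list(product(PEOPLE, WALKS))


def public_folds() -> Iterator[Tuple[List[Walk], List[Walk]]]:
    """Leave-one-person-out: the test person never appears in training."""
    for p in PEOPLE:
        train = [w for w in _all_walks() if w[0] != p]
        test = [w for w in _all_walks() if w[0] == p]
        yield train, test


def private_folds() -> Iterator[Tuple[List[Walk], List[Walk]]]:
    """Hold out walk k of every person: all people appear in training and test."""
    for k in WALKS:
        train = [w for w in _all_walks() if w[1] != k]
        test = [w for w in _all_walks() if w[1] == k]
        yield train, test


def select_sensors(features: Sequence[float], sensors: Sequence[int]) -> List[float]:
    """Keep the luminance values of the chosen sensors (features indexed by sensor ID)."""
    return [features[i] for i in sensors]


for name, folds in (("public", public_folds()), ("private", private_folds())):
    for train, test in folds:
        print(name, len(train), "train walks,", len(test), "test walks")
print(select_sensors([10, 11, 12, 13, 14, 15], CORNER_SENSORS))  # [10, 12, 13, 15]
```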

For additional results and details on our methodology, please refer to our paper below.

Publications:

- D. Roeper, J. Chen, J. Konrad, and P. Ishwar, “Privacy-preserving, indoor occupant localization using a network of single-pixel sensors,” in Proc. IEEE Int. Conf. Advanced Video and Signal-Based Surveillance, Aug. 2016.