Improved Indoor Clutter Classification from Images

Team: Z. Sun, J. Muroff (School of Social Work), J. Konrad

Status: Ongoing (2022-)

This project is a multidisciplinary collaboration between the College of Engineering and the School of Social Work at Boston University.

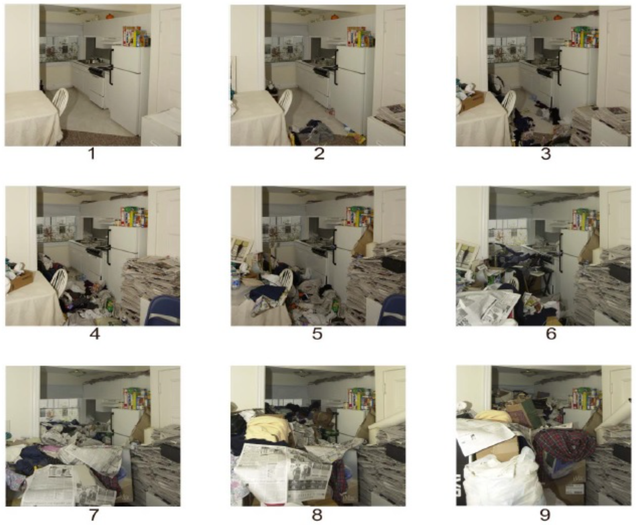

Background: Hoarding disorder (HD) is a complex and impairing mental-health and public-health problem characterized by persistent difficulty discarding ordinary items, regardless of their value, and by distress associated with doing so, which results in clutter in the living space. In severe cases, hoarding poses health risks, including fires, falls, and poor sanitation. In general, the quality of life of a person with HD is markedly and adversely affected, and family relationships are often strained. In the United States, HD affects about 5% of the adult population and is a serious social issue. HD symptoms are typically assessed using self-report and clinical-interview instruments. Given the visual aspect of HD, the Clutter Image Rating (CIR) scale was developed by R.O. Frost, G. Steketee, D.F. Tolin, and S. Renaud. This pictorial instrument includes a set of 9 “clutter-equidistant” photos for each of 3 rooms (bedroom, living room, kitchen) that are used to rate clutter severity (see image below). Ratings may be provided by clients, practitioners, family members, etc., and, therefore, may be biased.

Clutter Image Rating scale for kitchen (G. Steketee and R.O. Frost, “Treatment for Hoarding Disorder: Assessing Hoarding Problems,” Copyright © 2013 by Oxford University Press).

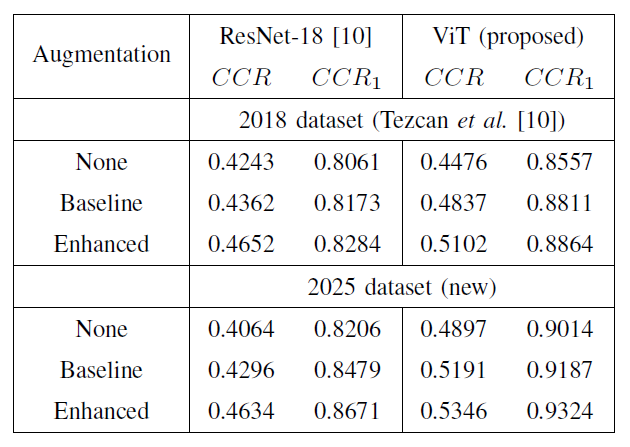

Summary: In the last decade, several automated methods have been developed for objective CIR assessment, for example methods based on SVM and ResNet-18. Here, we propose an improved method based on the Vision Transformer (ViT). Because relatively few hoarding images are available, we also propose enhanced data augmentation. While, ideally, the goal is to classify clutter as a single integer value between 1 and 9, even trained professionals acknowledge that the CIR values they assign may be off by ±1. Therefore, we define the CIR assessment problem as multi-label, rather than single-label, image classification and use a modified Correct Classification Rate (CCR) to assess performance. This new performance measure, called CCR1, counts every predicted CIR value within ±1 of the ground truth as correct.
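To make the difference between CCR and CCR1 concrete, here is a minimal sketch that computes both from lists of ground-truth and predicted CIR values. The function name `ccr_within` and the five example ratings are hypothetical and serve only as illustration; they are not taken from the project code or data.

```python
def ccr_within(true_ratings, predicted_ratings, tolerance=0):
    """Fraction of images whose predicted CIR lies within `tolerance` of the truth.

    tolerance=0 gives the standard CCR; tolerance=1 gives CCR1.
    """
    if len(true_ratings) != len(predicted_ratings):
        raise ValueError("rating lists must have the same length")
    if not true_ratings:
        raise ValueError("at least one rating is required")
    hits = sum(
        abs(truth - pred) <= tolerance
        for truth, pred in zip(true_ratings, predicted_ratings)
    )
    return hits / len(true_ratings)


# Hypothetical ratings for five images (CIR values range from 1 to 9).
truth = [1, 4, 5, 7, 9]
preds = [1, 5, 3, 7, 8]

ccr = ccr_within(truth, preds, tolerance=0)   # exact matches only
ccr1 = ccr_within(truth, preds, tolerance=1)  # matches within +/-1
print(f"CCR = {ccr:.2f}, CCR1 = {ccr1:.2f}")
```

In this example two predictions match exactly and two more are off by one, so CCR1 is twice as high as CCR. CCR1 can never be lower than CCR.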

Enhanced data augmentation: Due to a relatively small dataset size, earlier works applied data augmentation by means of random horizontal and vertical image shifts by 5, 10, or 15 pixels, and a horizontal “flip”. However, a maximum shift of 15 pixels is very small even for 224 x 224 images, so very little visual information (clutter) is changed. To allow more significant visual “jitter”, we increase the maximum range of shifts to ±30 pixels while keeping 5-pixel increments. We also retain the horizontal “flip”. Furthermore, since pictures of clutter are taken at a variety of angles (frequently not aligned with room features, e.g., door and window frames, room corners), we add a further geometric augmentation: random image rotation by up to ±9 degrees in 1-degree increments. Finally, because of the diversity of cameras used and the wide range of possible illumination conditions, we also apply color-jitter augmentation. This increases data diversity by randomly altering visual attributes of the images, namely brightness, contrast, color saturation, and hue, and thereby helps the model generalize to unseen data. Examples of such augmentations can be found in the Master’s thesis listed below.
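The parameter ranges above can be sketched as a small sampler that draws one set of augmentation parameters per image. This is only an illustration: the function name `sample_augmentation`, the 50% flip probability, the inclusion of a zero shift, and all color-jitter ranges are assumptions, since the text does not specify them. Only the ±30-pixel shifts in 5-pixel steps and the ±9-degree rotations in 1-degree steps come from the description.

```python
import random

# Ranges stated in the text.
SHIFT_CHOICES = list(range(-30, 31, 5))   # -30, -25, ..., 30 pixels
ROTATION_CHOICES = list(range(-9, 10))    # -9, -8, ..., 9 degrees


def sample_augmentation(rng):
    """Draw one random set of augmentation parameters for a single image."""
    return {
        "shift_x": rng.choice(SHIFT_CHOICES),
        "shift_y": rng.choice(SHIFT_CHOICES),
        "rotation_deg": rng.choice(ROTATION_CHOICES),
        # Assumed: the flip is applied with probability 0.5.
        "flip_horizontal": rng.random() < 0.5,
        # Assumed color-jitter ranges (multiplicative factors, additive hue).
        "brightness": rng.uniform(0.8, 1.2),
        "contrast": rng.uniform(0.8, 1.2),
        "saturation": rng.uniform(0.8, 1.2),
        "hue": rng.uniform(-0.05, 0.05),
    }


rng = random.Random(0)  # fixed seed so the draw is reproducible
params = sample_augmentation(rng)
print(params)
```

With these ranges there are 13 possible shifts per axis and 19 possible rotation angles, compared with shifts of at most 15 pixels in the baseline augmentation.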

Vision Transformer (ViT) approach: We adapt the ViT architecture [Dosovitskiy et al., 2021] to our clutter classification problem. We resize all images to 224 x 224 pixels and divide each image into 196 non-overlapping patches (blocks) of 16 x 16 pixels. We map each patch to a 256-long vector by means of a fully-connected layer. We pass the resulting 196 x 256 matrix to the Transformer Encoder along with a positional encoding (learnable 1-D embedding) of each patch. Another learnable embedding is prepended to convey image information to the transformer output and then to the MLP head. Subsequent encoding operations are identical to those in the original Transformer model developed for language applications [Vaswani et al., 2017]. We feed the output of the Transformer Encoder into a simple MLP (a single fully-connected layer) with a 9-class output to allow CIR classification. Since ViT is a large model (330MB in our adaptation), training it from scratch with a relatively small image dataset is counterproductive. Instead, we employ transfer learning and use the vit_base_patch16_224 model from the timm library, initialized with weights pre-trained on ImageNet. We fine-tune the MLP head on our dataset while keeping the transformer unchanged.
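The patch arithmetic in this description can be checked with a short sketch. It splits a single-channel 224 x 224 image into 16 x 16 patches and counts them; the helper names `patch_grid` and `split_into_patches` are hypothetical, and the single channel is a simplification (for an RGB image each patch would hold 16 x 16 x 3 values before the fully-connected projection).

```python
def patch_grid(image_size=224, patch_size=16):
    """Return (patches per side, total patches) for a square image."""
    if image_size % patch_size != 0:
        raise ValueError("image size must be a multiple of the patch size")
    per_side = image_size // patch_size
    return per_side, per_side * per_side


def split_into_patches(image, patch_size=16):
    """Split an H x W image (list of rows) into flattened patches, row-major."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be multiples of the patch size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patches.append([
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ])
    return patches


per_side, num_patches = patch_grid(224, 16)
image = [[0] * 224 for _ in range(224)]   # placeholder single-channel image
patches = split_into_patches(image, 16)

# One extra position accounts for the prepended learnable embedding.
sequence_length = num_patches + 1
print(per_side, num_patches, len(patches[0]), sequence_length)
```

The grid is 14 patches per side, which gives the 196 rows of the matrix passed to the Transformer Encoder. With the prepended learnable embedding, the encoder processes a sequence of 197 tokens.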

New hoarding-image dataset: HINDER-2025 is a new dataset of indoor images of hoarding-related clutter that has been collected and rated for CIR by health professionals to support this research. Details regarding the dataset and how to download it are available here: HINDER-2025.

Experimental Results: In all experiments, we used the loss function proposed in [Tezcan et al., 2018], which makes it possible to balance CCR and CCR1 performance. We trained and tested our ViT-based CIR classifier on the HINDER-2025 dataset by means of 4-fold cross-validation. We ran each testing scenario 10 times, each time for 50 epochs, and computed average CCR and CCR1 from the highest respective values in the last 10 epochs. The table below compares the performance of the proposed ViT-based image-clutter classification with that of ResNet-18 [Tezcan et al., 2018] on the original HINDER-2018 dataset and the new HINDER-2025 dataset, with no augmentation, with the baseline augmentation developed in [Tezcan et al., 2018], and with the enhanced augmentation described above. The proposed enhanced augmentation improves performance by 1.55-3.90% points in CCR and by 0.53-1.92% points in CCR1 across datasets and methods. The proposed ViT-based image-clutter rating method outperforms ResNet-18 on both datasets and for all variants of augmentation by 4.50-7.12% points in CCR and by 5.80-6.53% points in CCR1. Importantly, the new algorithm achieves a CCR1 of over 93%, with CCR exceeding 53%, on the new dataset. This suggests that algorithmic clutter rating close in performance to ratings by health professionals is within reach.
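The evaluation protocol can be sketched as follows, under one reading of the description: for each run, take the best score within the last 10 epochs, then average those best scores over the runs. The helper names `kfold_indices` and `summarize_runs`, the contiguous unshuffled folds, and the synthetic scores are all assumptions made for illustration; they are not the project's code or results.

```python
def kfold_indices(num_samples, num_folds=4):
    """Split range(num_samples) into (train, test) index lists, one per fold.

    Folds are contiguous and unshuffled (an assumption; shuffling is common).
    """
    if num_folds < 2 or num_folds > num_samples:
        raise ValueError("need 2 <= num_folds <= num_samples")
    base, extra = divmod(num_samples, num_folds)
    folds, start = [], 0
    for fold in range(num_folds):
        stop = start + base + (1 if fold < extra else 0)
        test = list(range(start, stop))
        train = list(range(0, start)) + list(range(stop, num_samples))
        folds.append((train, test))
        start = stop
    return folds


def summarize_runs(per_epoch_scores, last_n=10):
    """Average, over runs, of the highest score in the last `last_n` epochs."""
    if not per_epoch_scores:
        raise ValueError("at least one run is required")
    best_per_run = [max(run[-last_n:]) for run in per_epoch_scores]
    return sum(best_per_run) / len(best_per_run)


folds = kfold_indices(10, num_folds=4)

# Two synthetic 50-epoch runs (made-up scores, not experimental results).
rising = [epoch / 100 for epoch in range(50)]  # best of last 10 epochs: 0.49
flat = [0.60] * 50                             # best of last 10 epochs: 0.60
average_best = summarize_runs([rising, flat], last_n=10)
print(f"{len(folds)} folds, average best score = {average_best:.3f}")
```

Taking the maximum over the last 10 epochs rather than the final-epoch value makes the summary less sensitive to epoch-to-epoch fluctuation late in training.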

Conclusions: Automatic CIR estimation can be a valuable tool in HD treatment. It removes the need for human-based scoring (cost, bias, repeatability issues), thus simplifying assessment and monitoring of treatment progress while facilitating real-time feedback. The method proposed here is a promising step in this direction.

Publications:

- Z. Sun, J. Muroff, and J. Konrad, “Classification of indoor clutter from images: Application to hoarding assessment,” in 33rd European Signal Processing Conference (EUSIPCO-2025), Palermo, Italy, Sept. 2025.

- Z. Sun, “Image-based classification of hoarding clutter using deep learning,” Master’s thesis, Boston University, May 2024.